Introduction to PyMC3¶

Prerequisite - Course 2¶

Attribution¶

It is important to acknowledge the authors who have put together fantastic resources that have allowed me to make this notebook possible.

The majority of the the examples here are taken from the book ‘Introduction to statistical modeling and probabilistic programming using PyMC3 and ArviZ’, Second Edition by Osvaldo Martin

PyMC3 website

Bayesian Methods for Hackers by Davidson-Pilon Cameron

Doing Bayesian Data Analysis by John Kruschke

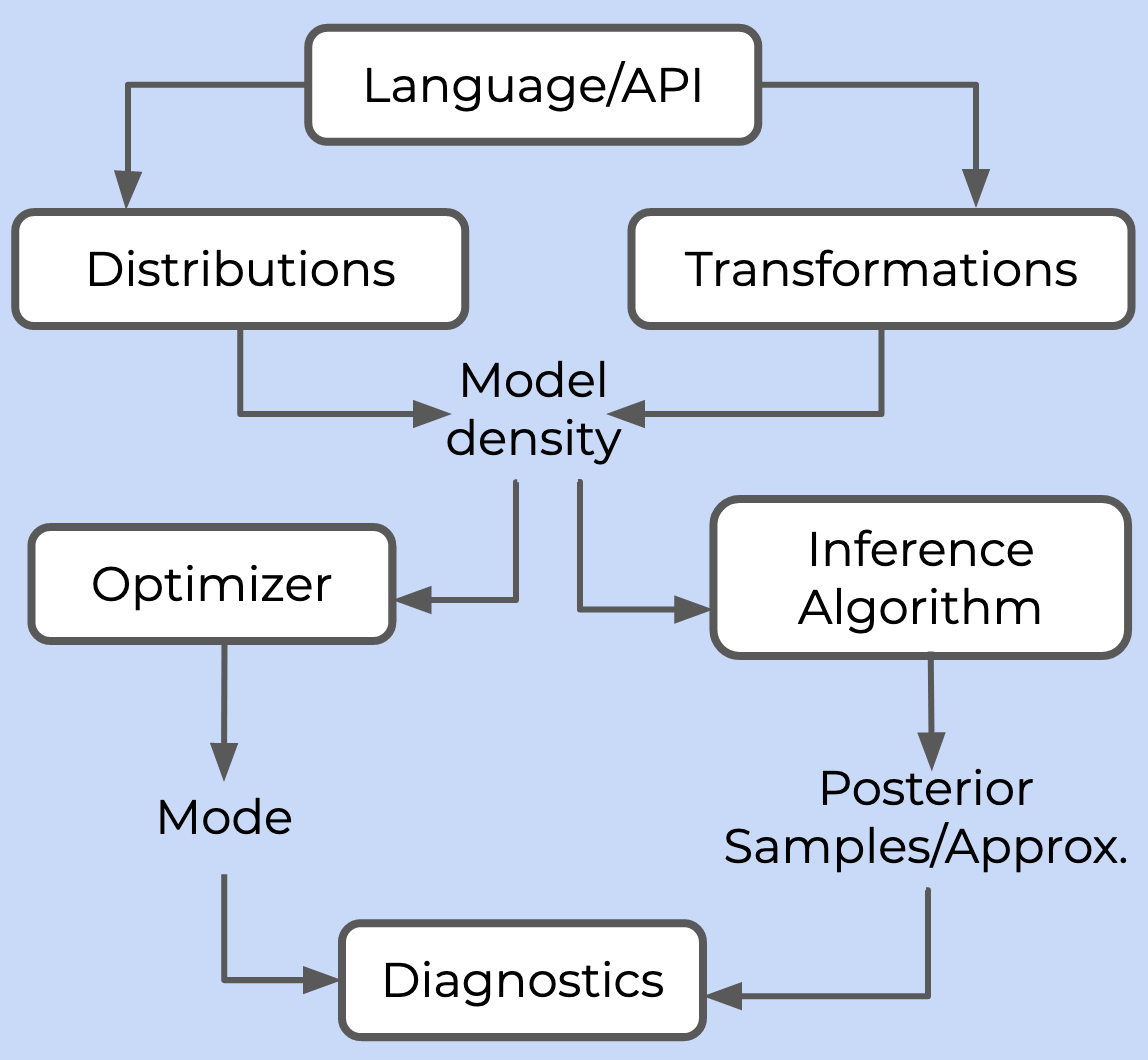

Overview of Probabilistic Programming¶

An overview of probabilistic frameworks is given in this post by George Ho, one of the developers of PyMC3. He outlines the components needed for a probabilistic framework in this figure

What is PyMC3?¶

PyMC3 is a probabilistic programming framework for performing Bayesian modeling and visualization. It uses Theano as a backend. It has algorithms to perform Monte Carlo simulation as well as Variational Inference. It also has a diagnostic visualization tool called ArViz.

It can be used to infer values of parameters of models that we are unsure about by utilizing the observed data. A good example is given here https://docs.pymc.io/notebooks/ODE_API_introduction.html.

We are trying to estimate the parameters of air resistance (\(\gamma\)) from the Ordinary Differential Equation (ODE) of freefall. We have an understanding of the physics behind freefall as represented by the ODE and we have observed/measured some of the variables such as mass (m), position (y) and velocity (\(\dfrac{dy}{dt}\)) but we don’t know what the parameter of air resistance is here. We can use PyMC3 to perform inference and give us a distribution of potential values of air resistance. A key point to note here is that the more information we have regarding other variables, the more certainty we have in our desired variable (air resistance). Suppose we are unsure about the gravitational constant (g) used in the ODE (implemented by specifying a prior distribution as opposed to a constant value of 9.8), we get more uncertainty in the air resistance variable as well.

General Structure of PyMC3¶

It consists of phenomena represented by equations made up of random variables and deterministic variables. The random variables can be divided into observed variables and unobserved variables. The observed variables are those for which we have data and the unobserved variables are those for which we have to specify a prior distribution.

Observed Variables¶

with pm.Model():

obs = pm.Normal('x', mu=0, sd=1, observed=np.random.randn(100))

Unobserved Variables¶

with pm.Model():

x = pm.Normal('x', mu=0, sd=1)

We will look at an example of Linear Regression to illustrate the fundamental features of PyMC3.

An example with Linear Regression¶

The example below illustrates linear regression with a single output variable and two input variables.

import pymc3

pymc3.__version__

'3.10.0'

Generate the Data¶

%matplotlib inline

import arviz as az

import numpy as np

import warnings

warnings.filterwarnings("ignore")

import matplotlib.pyplot as plt

import graphviz

import os

os.environ['OMP_NUM_THREADS'] = '4'

# Initialize random number generator

np.random.seed(123)

# True parameter values

alpha, sigma = 1, 1

beta = [1, 2.5]

# Size of dataset

size = 100

# Predictor variable

X1 = np.linspace(0, 1, size)

X2 = np.linspace(0,.2, size)

# Simulate outcome variable

Y = alpha + beta[0]*X1 + beta[1]*X2 + np.random.randn(size)*sigma

import pymc3 as pm

from pymc3 import Model, Normal, HalfNormal

from pymc3 import find_MAP

Model Setup in PyMC3¶

basic_model = Model()

with basic_model:

# Priors for unknown model parameters

alpha = Normal('alpha', mu=0, sd=5)

beta = Normal('beta', mu=0, sd=5, shape=2)

sigma = HalfNormal('sigma', sd=4)

# Expected value of outcome

mu = alpha + beta[0]*X1 + beta[1]*X2

# Deterministic variable, to have PyMC3 store mu as a value in the trace use

# mu = pm.Deterministic('mu', alpha + beta[0]*X1 + beta[1]*X2)

# Likelihood (sampling distribution) of observations

Y_obs = Normal('Y_obs', mu=mu, sd=sigma, observed=Y)

pm.model_to_graphviz(basic_model)

Plate Notation¶

A way to graphically represent variables and their interactions in a probabilistic framework.

MAP Estimate¶

PyMC3 computes the MAP estimate using numerical optimization, by default using the BFGS algorithm. These provide a point estimate which may not be accurate if the mode does not appropriately represent the distribution.

map_estimate = find_MAP(model=basic_model, maxeval=10000)

map_estimate

logp = -164.27, ||grad|| = 3.5988: 100%|██████████| 20/20 [00:00<00:00, 3204.69it/s]

{'alpha': array(1.0154193),

'beta': array([1.46379432, 0.29275886]),

'sigma_log__': array(0.12023688),

'sigma': array(1.12776396)}

Inference in PyMC3¶

from pymc3 import NUTS, sample

from scipy import optimize

with basic_model:

# obtain starting values via MAP

start = find_MAP(fmin=optimize.fmin_powell)

# instantiate sampler - not really a good practice

step = NUTS(scaling=start)

# draw 2000 posterior samples

trace = sample(2000, step, start=start)

Optimization terminated successfully.

Current function value: 163.261934

Iterations: 5

Function evaluations: 230

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [sigma, beta, alpha]

Sampling 4 chains for 1_000 tune and 2_000 draw iterations (4_000 + 8_000 draws total) took 46 seconds.

The number of effective samples is smaller than 25% for some parameters.

You can also pass a parameter to step that indicates the type of sampling algorithm to use such as

Metropolis

Slice sampling

NUTS

PyMC3 can automatically determine the most appropriate algorithm to use here, so it is best to use the default option.

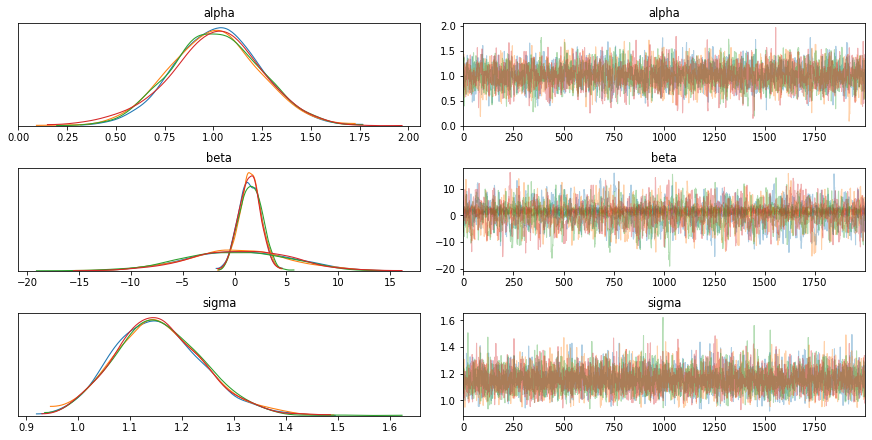

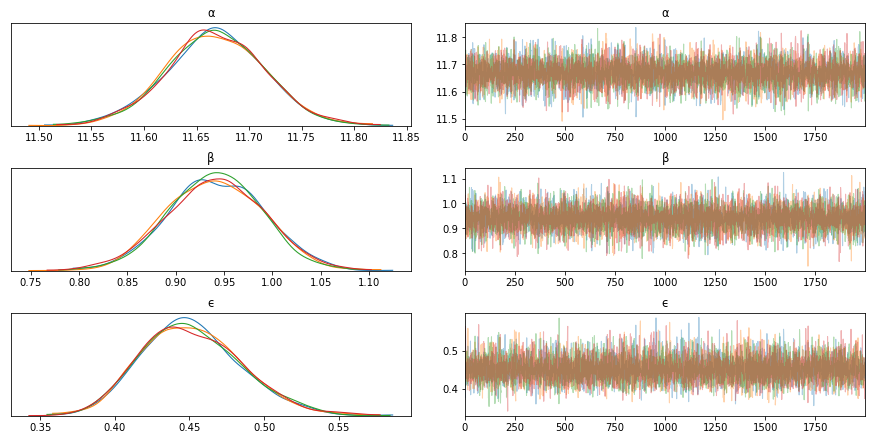

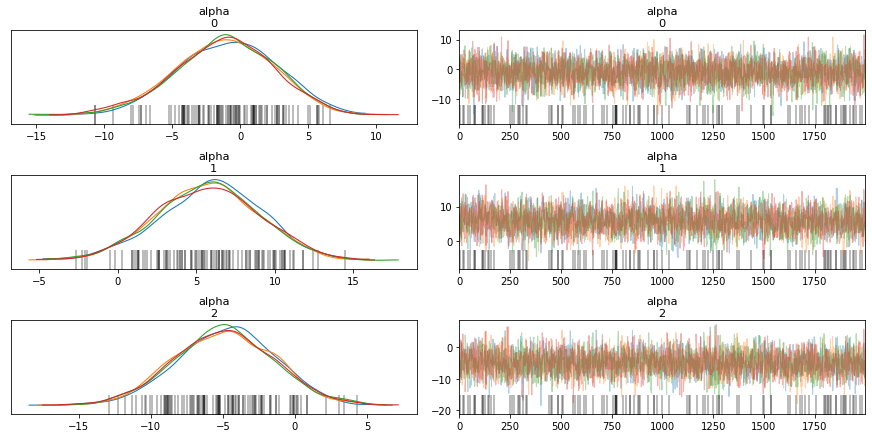

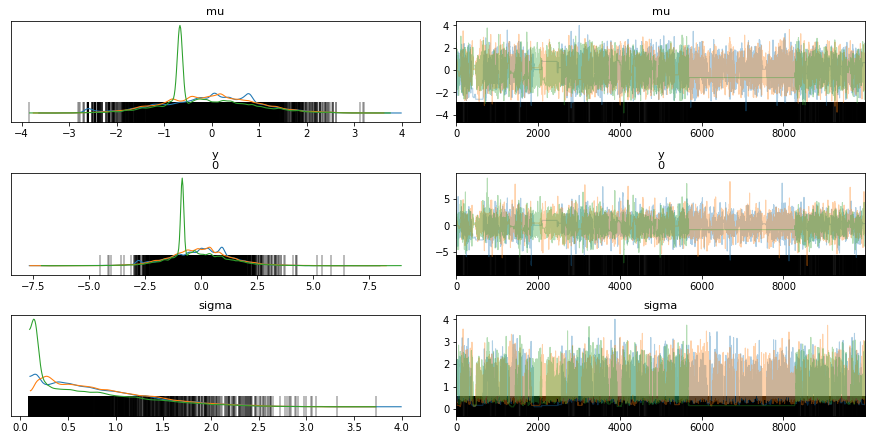

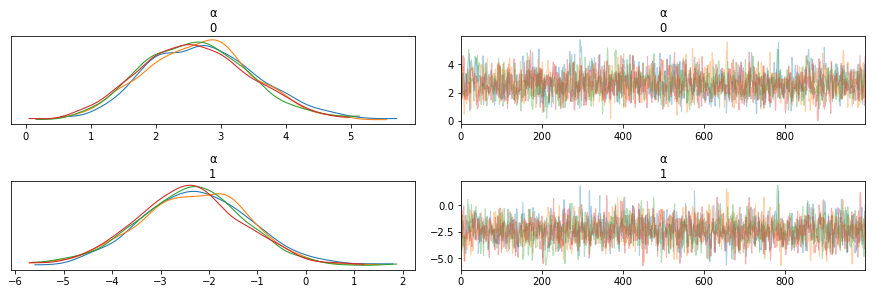

Distribution Information through Traceplots¶

trace['alpha']

array([1.17257076, 0.66991082, 1.26713518, ..., 1.3967013 , 1.2705309 ,

1.10360491])

from pymc3 import traceplot

traceplot(trace)

array([[<matplotlib.axes._subplots.AxesSubplot object at 0x7fc311abd580>,

<matplotlib.axes._subplots.AxesSubplot object at 0x7fc30d4510d0>],

[<matplotlib.axes._subplots.AxesSubplot object at 0x7fc30dfba4f0>,

<matplotlib.axes._subplots.AxesSubplot object at 0x7fc311d11880>],

[<matplotlib.axes._subplots.AxesSubplot object at 0x7fc30d086f40>,

<matplotlib.axes._subplots.AxesSubplot object at 0x7fc311b21cd0>]],

dtype=object)

We will look at the summary of the sampling process. The columns will be explained as we progress through this course.

az.summary(trace)

| mean | sd | hpd_3% | hpd_97% | mcse_mean | mcse_sd | ess_mean | ess_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| alpha | 1.008 | 0.229 | 0.557 | 1.419 | 0.004 | 0.003 | 3976.0 | 3976.0 | 4009.0 | 3701.0 | 1.0 |

| beta[0] | 1.482 | 1.044 | -0.438 | 3.441 | 0.028 | 0.020 | 1426.0 | 1426.0 | 1425.0 | 1760.0 | 1.0 |

| beta[1] | 0.264 | 4.847 | -8.781 | 9.343 | 0.130 | 0.092 | 1397.0 | 1397.0 | 1398.0 | 2042.0 | 1.0 |

| sigma | 1.155 | 0.085 | 0.993 | 1.312 | 0.001 | 0.001 | 7311.0 | 7125.0 | 7574.0 | 6080.0 | 1.0 |

from pymc3 import summary

summary(trace)

| mean | sd | hpd_3% | hpd_97% | mcse_mean | mcse_sd | ess_mean | ess_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| alpha | 1.008 | 0.229 | 0.557 | 1.419 | 0.004 | 0.003 | 3976.0 | 3976.0 | 4009.0 | 3701.0 | 1.0 |

| beta[0] | 1.482 | 1.044 | -0.438 | 3.441 | 0.028 | 0.020 | 1426.0 | 1426.0 | 1425.0 | 1760.0 | 1.0 |

| beta[1] | 0.264 | 4.847 | -8.781 | 9.343 | 0.130 | 0.092 | 1397.0 | 1397.0 | 1398.0 | 2042.0 | 1.0 |

| sigma | 1.155 | 0.085 | 0.993 | 1.312 | 0.001 | 0.001 | 7311.0 | 7125.0 | 7574.0 | 6080.0 | 1.0 |

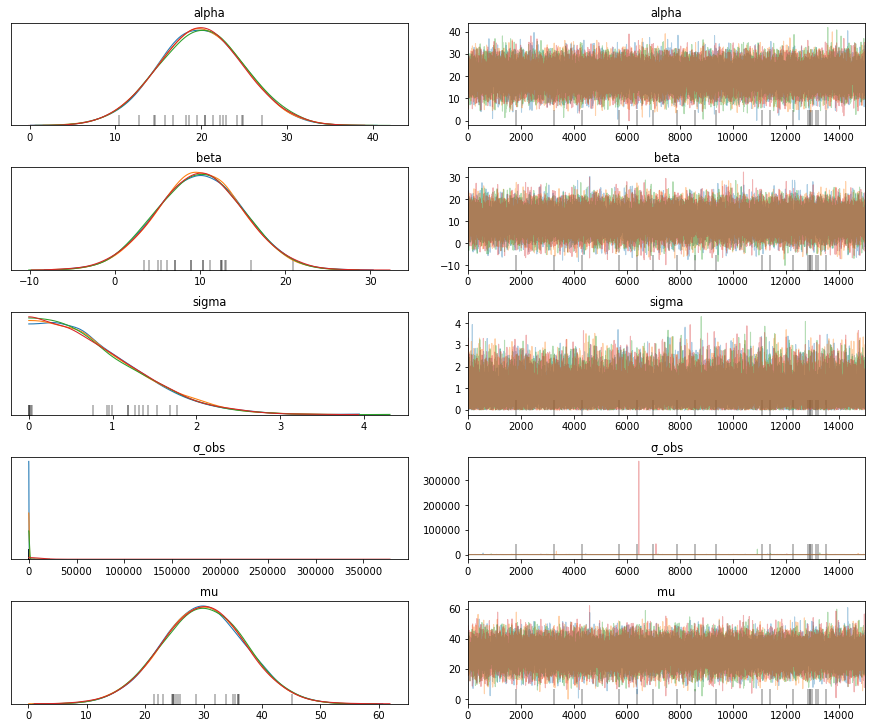

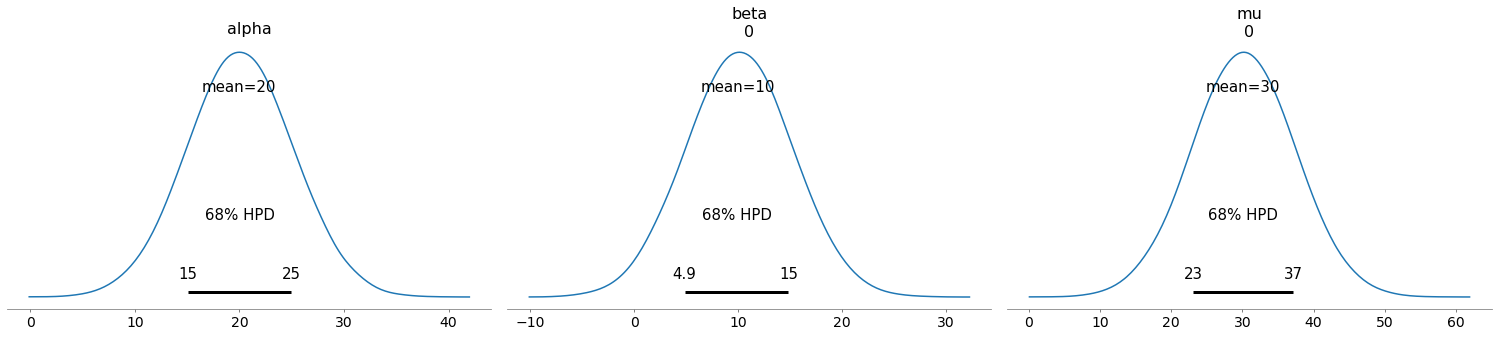

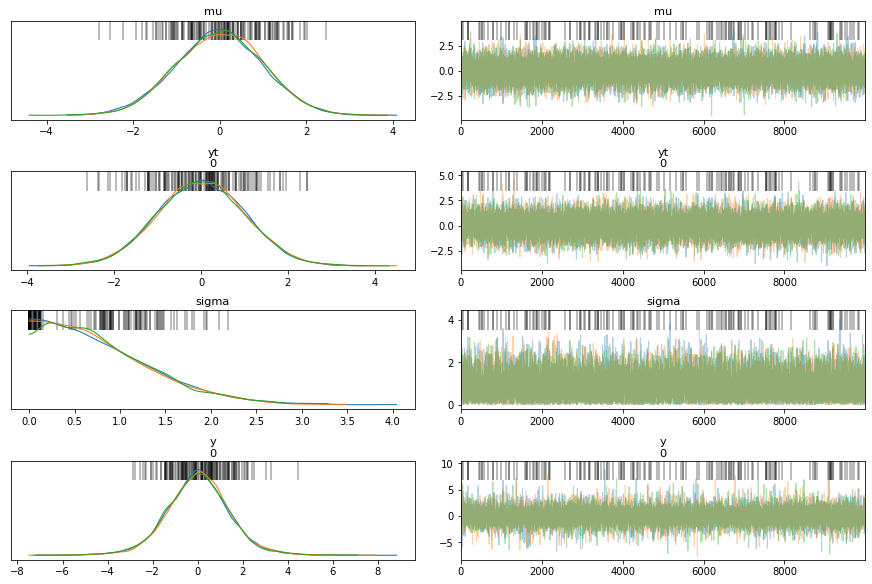

Composition of Distributions for Uncertainty¶

You can do the same without observations, to perform computations and get uncertainty quantification. In this example add two normally distributed variables to get another normally distributed variable. By definition

basic_model2 = Model()

with basic_model2:

# Priors for unknown model parameters

alpha = Normal('alpha', mu=20, sd=5)

beta = Normal('beta', mu=10, sd=5, shape=1)

# These aren't used in the calculation, but please experiment by composing various combinations

# of these function for calculating mu

sigma = HalfNormal('sigma', sd=1)

σ_obs = pm.HalfCauchy("σ_obs", beta=1, testval=0.1)

# Expected value of outcome

mu = pm.Deterministic('mu', alpha + beta)

trace = sample(15000)

print(trace['mu'])

traceplot(trace)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [σ_obs, sigma, beta, alpha]

Sampling 4 chains, 19 divergences: 100%|██████████| 62000/62000 [00:15<00:00, 4058.26draws/s]

There were 15 divergences after tuning. Increase `target_accept` or reparameterize.

There were 4 divergences after tuning. Increase `target_accept` or reparameterize.

The acceptance probability does not match the target. It is 0.882730362833375, but should be close to 0.8. Try to increase the number of tuning steps.

[[32.09431669]

[32.25815932]

[32.85080701]

...

[34.50857964]

[26.83640328]

[35.88096126]]

array([[<matplotlib.axes._subplots.AxesSubplot object at 0x7fc30e1681f0>,

<matplotlib.axes._subplots.AxesSubplot object at 0x7fc30cc22a90>],

[<matplotlib.axes._subplots.AxesSubplot object at 0x7fc30d6334c0>,

<matplotlib.axes._subplots.AxesSubplot object at 0x7fc30da4a2e0>],

[<matplotlib.axes._subplots.AxesSubplot object at 0x7fc30d729af0>,

<matplotlib.axes._subplots.AxesSubplot object at 0x7fc30d6444c0>],

[<matplotlib.axes._subplots.AxesSubplot object at 0x7fc30d5fca60>,

<matplotlib.axes._subplots.AxesSubplot object at 0x7fc308e066d0>],

[<matplotlib.axes._subplots.AxesSubplot object at 0x7fc30b113be0>,

<matplotlib.axes._subplots.AxesSubplot object at 0x7fc30bafdf10>]],

dtype=object)

# Note the mean and standard deviation of the variable mu. We didn't need observations to compute this uncertainty.

try:

# older syntax

pm.plot_posterior(trace, var_names=['alpha', 'beta', 'mu'], credible_interval=0.68)

except:

pm.plot_posterior(trace, var_names=['alpha', 'beta', 'mu'], hdi_prob=0.68)

GRADED EVALUATION (5 mins)¶

What type of algorithms does PyMC3 support?

a. MCMC

b. Variational Inference

c. Both

We can mix Deterministic and Probabilistic variables in PyMC3

a. True

b. False

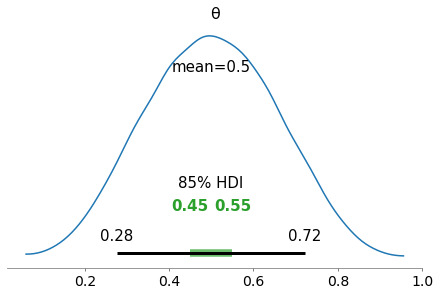

HPD, Credible Interval, HDI and ROPE¶

What is it used for?¶

HDI, HPD and ROPE are essentially used for making decisions from the posterior distribution.

HPD and Credible Interval¶

For example, if we plot the posterior of a beta distribution with some parameters, the credible interval for the Highest Posterior Density (HPD) is the shortest interval that has the given probability indicated by the HPD. This interval is also called the HPD interval. As the name indicates, this involves regions of the highest posterior probability density. For unimodal distributions, this includes the mode.

HDI¶

A related term is the Highest Density Interval (HDI) which is a more general term that can apply for any distribution such as a prior and not just the posterior. In other words a posterior’s HDI is called the HPD interval.

As an example, if we suspect that the dice used at a casino is loaded, we can infer the probability of getting the value 3 from the six possible outcomes. Ideally, this should be 1/6 = 0.16666. If this happens to fall in the HPD interval, we can assume that the dice is fair however it may be that the distribution may be biased to one side or the other.

ROPE¶

What is the probability of getting a value given by x? We can’t really calculate this exactly but we can compute this probability within a range given by x + \(\Delta\)x, x - \(\Delta\)x.

Sometimes, instead of looking at the probability that x = 0.16666, we look at the probability that it falls within the range 0.12 and 0.20. This range is called the Region of Practical Equivalence or ROPE. This implies that, based on our subjective opinion, getting a value between 0.12 and 0.20 is practically equivalent to getting a 0.16666. Hence, we can assume that the dice is fair given any value within this range. ROPE allows us to make decisions about an event from an inferred posterior distribution. After computing the posterior, the ROPE given by 0.12 and 0.20 can either overlap with the HPD (of getting a 3)

completely

not overlap at all

partially overlap with the HPD

Complete overlap suggests that our computed probability coincides with what we would expect from a fair dice. If it does not overlap, it is not a fair dice and a partial overlap indicates that we cannot be certain that is either fair or unfair.

In short, we define a ROPE based on our subject matter expertise and compare it to the HPD to make a decision from the posterior distribution.

Credible intervals vs. Confidence Intervals¶

This deserves special mention particularly due to the subtle differences stemming from the Bayesian (credible intervals) vs. Frequentist (confidence intervals) approaches involved. Bayesians consider the parameters to be a distribution, and for them there is no true parameter. However, Frequentists fundamentally assume that there exists a true parameter.

Confidence intervals quantify our confidence that the true parameter exists in this interval. It is a statement about the interval.

Credible intervals quantify our uncertainty about the parameters since there are no true parameters in a Bayesian setting. It is a statement about the probability of the parameter.

For e.g. if we are trying to estimate the R0 for COVID-19, one could say that we have a 95% confidence interval of the true R0 being between 2.0 and 3.2. In a Bayesian setting, the 95% credible interval of (2, 3.2) implies that 95% of the possible R0 values fall between 2.0 and 3.2.

We will see how we can visualize the following using ArViz

HDI (Black lines)

ROPE (Green lines)

import numpy as np

from scipy import stats as stats

np.random.seed(1)

try:

az.plot_posterior({'θ':stats.beta.rvs(5, 5, size=20000)},

credible_interval=0.75, # defaults to 94%

#hdi_prob = 0.85,

rope =[0.45, 0.55])

except:

az.plot_posterior({'θ':stats.beta.rvs(5, 5, size=20000)},

#credible_interval=0.75, # defaults to 94%

hdi_prob = 0.85, # credible_interval is deprecated, use hdi_prob

rope =[0.45, 0.55])

array([<AxesSubplot:title={'center':'θ'}>], dtype=object)

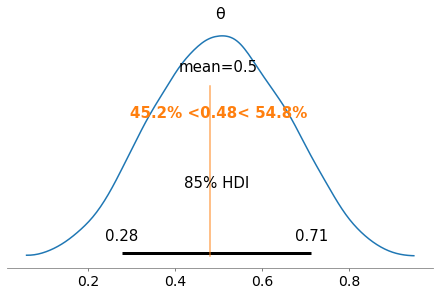

Another way to do this is by plotting a reference value on the posterior. Below, a reference value of 0.48 is used and it can be seen that 45.2% of the posterior is below this value while 54.8% of the posterior is above this value. If we were estimating our parameter to have a value of 0.48, this suggests a good fit but with a slight right bias or in other words that the parameter is likely to have a value greater than 0.48.

try:

az.plot_posterior({'θ':stats.beta.rvs(5, 5, size=20000)},

credible_interval=0.75, # defaults to 94%

#hdi_prob = 0.85, # credible_interval is deprecated, use hdi_prob

ref_val=0.48)

except:

az.plot_posterior({'θ':stats.beta.rvs(5, 5, size=20000)},

#credible_interval=0.75, # defaults to 94%

hdi_prob = 0.85, # credible_interval is deprecated, use hdi_prob

ref_val=0.48)

array([<AxesSubplot:title={'center':'θ'}>], dtype=object)

Graded Evaluation (15 min)¶

HDI and HPD are the same

a. True

b. False

HPD is used for making decisions from the posterior distribution

a. True

b. False

ROPE is a subjective but informed interval to help make decisions from the posterior distribution

a. True

b. False

In order to confirm our hypothesis that we have the right estimate for our parameter, we want our ROPE and the HPD to have

a. complete overlap

b. partial overlap

c. no overlap

A reference value can be used to indicate tge direction of bias in our posterior dsitribution

a. True

b. False

Modeling with a Gaussian Distribution¶

Gaussians (Normal distributions) are normally used to approximate a lot of practical data distributions. Some of the reasons for this are:

The Central Limit Theorem, which states:

The distribution of the sample means will be a normal distributionIntuitive explanation of the Central Limit Theorem

which implies that if we take the mean of the sample means, we should get the true population mean.

A more subtle reason for adopting the Gaussian distribution to represent a lot of phenomena is the fact that a lot of these phenomena themselves are a result of averages of varying factors.

Mathematical tractability of the distribution - it is easy to compute in closed form. While not every distribution can be approximated with a single Gaussian distribution, we can use a mixture of Gaussians to represent other multi-modal distributions.

The probability density for a Normal distribution in a single dimension is given by:

\(p(x) = \dfrac{1}{\sigma \sqrt{2 \pi}} e^{-(x - \mu)^2 / 2 \sigma^2}\)

where \(\mu\) is the mean and \(\sigma\) is the standard deviation. In higher dimensions, we have a vector of means and a covariance matrix.

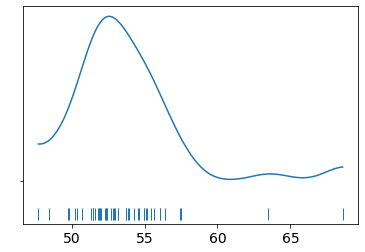

Example with PyMC3¶

We read the chemical shifts data, and plot the density to get an idea of the data distribution. It looks somewhat like a Gaussian so maybe we can start there. We have two parameters to infer, that is the mean and the standard deviation. We can estimate a prior for the mean by looking at the density and putting some bounds using a uniform prior. The standard deviation is however chosen to have a mean-centered half-normal prior (half-normal since the standard deviation cannot be negative). We can provide a hyperparameter for this by inspecting the density again. These values decide how well we converge to a solution so good values are essential for good results.

data = np.loadtxt('data/chemical_shifts.csv')

az.plot_kde(data, rug=True)

plt.yticks([0], alpha=0)

([<matplotlib.axis.YTick at 0x7fc30d00f160>],

<a list of 1 Text major ticklabel objects>)

data

array([55.12, 53.73, 50.24, 52.05, 56.4 , 48.45, 52.34, 55.65, 51.49,

51.86, 63.43, 53. , 56.09, 51.93, 52.31, 52.33, 57.48, 57.44,

55.14, 53.93, 54.62, 56.09, 68.58, 51.36, 55.47, 50.73, 51.94,

54.95, 50.39, 52.91, 51.5 , 52.68, 47.72, 49.73, 51.82, 54.99,

52.84, 53.19, 54.52, 51.46, 53.73, 51.61, 49.81, 52.42, 54.3 ,

53.84, 53.16])

import pymc3 as pm

from pymc3.backends import SQLite, Text

model_g = Model()

with model_g:

#backend = SQLite('test.sqlite')

db = pm.backends.Text('test')

μ = pm.Uniform('μ', lower=40, upper=70)

σ = pm.HalfNormal('σ', sd=10)

y = pm.Normal('y', mu=μ, sd=σ, observed=data)

trace_g = pm.sample(draws=1000) # backend = SQLite('test.sqlite') - Does not work

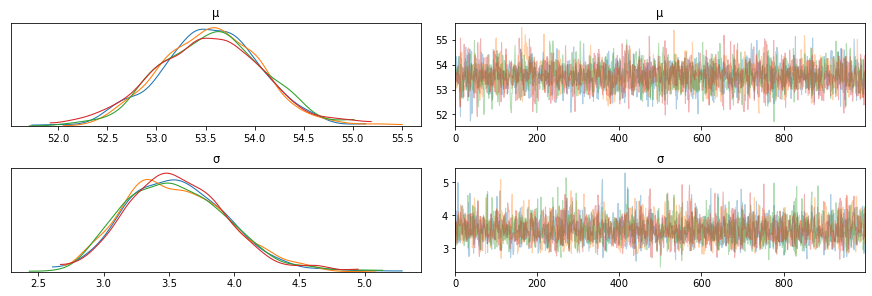

az.plot_trace(trace_g)

pm.model_to_graphviz(model_g)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [σ, μ]

Sampling 4 chains, 0 divergences: 100%|██████████| 6000/6000 [00:03<00:00, 1602.44draws/s]

Note on scalablility¶

If your trace information is too big as a result of too many variables or the model being large, you do not want to store this in memory since it can overrun the machine memory. Persisting this in a DB will allow you to reload it and inspect it at a later time as well. For each run, it appends the samples to the DB (or file if not deleted).

help(pm.backends)

Help on package pymc3.backends in pymc3:

NAME

pymc3.backends - Backends for traces

DESCRIPTION

Available backends

------------------

1. NumPy array (pymc3.backends.NDArray)

2. Text files (pymc3.backends.Text)

3. SQLite (pymc3.backends.SQLite)

The NDArray backend holds the entire trace in memory, whereas the Text

and SQLite backends store the values while sampling.

Selecting a backend

-------------------

By default, a NumPy array is used as the backend. To specify a different

backend, pass a backend instance to `sample`.

For example, the following would save the sampling values to CSV files

in the directory 'test'.

>>> import pymc3 as pm

>>> with pm.Model():

>>> db = pm.backends.Text('test')

>>> trace = pm.sample(..., trace=db)

Note that as in the example above, one must have an active model context,

or pass a `model` parameter in order to create a backend.

Selecting values from a backend

-------------------------------

After a backend is finished sampling, it returns a MultiTrace object.

Values can be accessed in a few ways. The easiest way is to index the

backend object with a variable or variable name.

>>> trace['x'] # or trace.x or trace[x]

The call will return the sampling values of `x`, with the values for

all chains concatenated. (For a single call to `sample`, the number of

chains will correspond to the `cores` argument.)

To discard the first N values of each chain, slicing syntax can be

used.

>>> trace['x', 1000:]

The `get_values` method offers more control over which values are

returned. The call below will discard the first 1000 iterations

from each chain and keep the values for each chain as separate arrays.

>>> trace.get_values('x', burn=1000, combine=False)

The `chains` parameter of `get_values` can be used to limit the chains

that are retrieved.

>>> trace.get_values('x', burn=1000, chains=[0, 2])

MultiTrace objects also support slicing. For example, the following

call would return a new trace object without the first 1000 sampling

iterations for all traces and variables.

>>> sliced_trace = trace[1000:]

The backend for the new trace is always NDArray, regardless of the

type of original trace. Only the NDArray backend supports a stop

value in the slice.

Loading a saved backend

-----------------------

Saved backends can be loaded using `load` function in the module for the

specific backend.

>>> trace = pm.backends.text.load('test')

Writing custom backends

-----------------------

Backends consist of a class that handles sampling storage and value

selection. Three sampling methods of backend will be called:

- setup: Before sampling is started, the `setup` method will be called

with two arguments: the number of draws and the chain number. This is

useful setting up any structure for storing the sampling values that

require the above information.

- record: Record the sampling results for the current draw. This method

will be called with a dictionary of values mapped to the variable

names. This is the only sampling function that *must* do something to

have a meaningful backend.

- close: This method is called following sampling and should perform any

actions necessary for finalizing and cleaning up the backend.

The base storage class `backends.base.BaseTrace` provides common model

setup that is used by all the PyMC backends.

Several selection methods must also be defined:

- get_values: This is the core method for selecting values from the

backend. It can be called directly and is used by __getitem__ when the

backend is indexed with a variable name or object.

- _slice: Defines how the backend returns a slice of itself. This

is called if the backend is indexed with a slice range.

- point: Returns values for each variable at a single iteration. This is

called if the backend is indexed with a single integer.

- __len__: This should return the number of draws.

When `pymc3.sample` finishes, it wraps all trace objects in a MultiTrace

object that provides a consistent selection interface for all backends.

If the traces are stored on disk, then a `load` function should also be

defined that returns a MultiTrace object.

For specific examples, see pymc3.backends.{ndarray,text,sqlite}.py.

PACKAGE CONTENTS

base

hdf5

ndarray

report

sqlite

text

tracetab

FILE

/Users/srijith.rajamohan/opt/anaconda3/envs/pymc3/lib/python3.8/site-packages/pymc3/backends/__init__.py

from pymc3.backends.sqlite import load

with model_g:

#trace = pm.backends.text.load('./mcmc')

trace = pm.backends.sqlite.load('./mcmc.sqlite')

print(len(trace['μ']))

71000

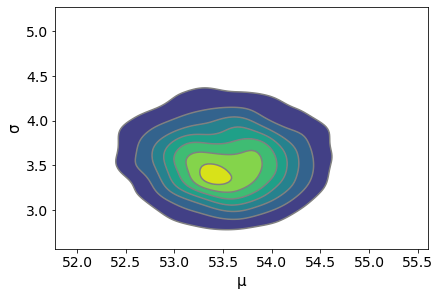

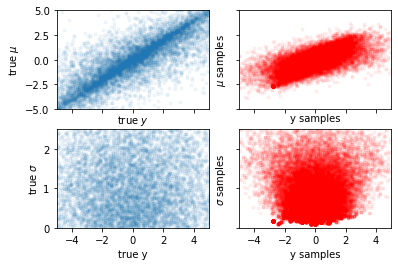

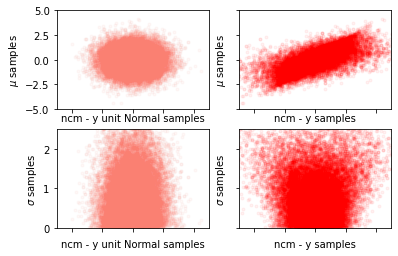

Pairplot for Correlations¶

Use a pairplot of the parameters to ensure that there are no correlations that would adversely affect the sampling process.

az.plot_pair(trace_g, kind='kde', fill_last=False)

<matplotlib.axes._subplots.AxesSubplot at 0x7fc327ebad90>

az.summary(trace_g)

| mean | sd | hpd_3% | hpd_97% | mcse_mean | mcse_sd | ess_mean | ess_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| μ | 53.524 | 0.544 | 52.504 | 54.513 | 0.010 | 0.007 | 2716.0 | 2716.0 | 2708.0 | 2008.0 | 1.0 |

| σ | 3.564 | 0.389 | 2.877 | 4.320 | 0.007 | 0.005 | 2902.0 | 2755.0 | 3059.0 | 2413.0 | 1.0 |

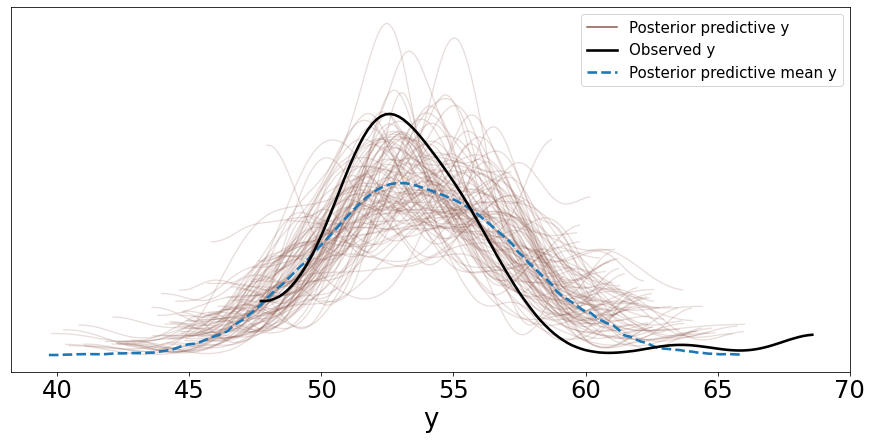

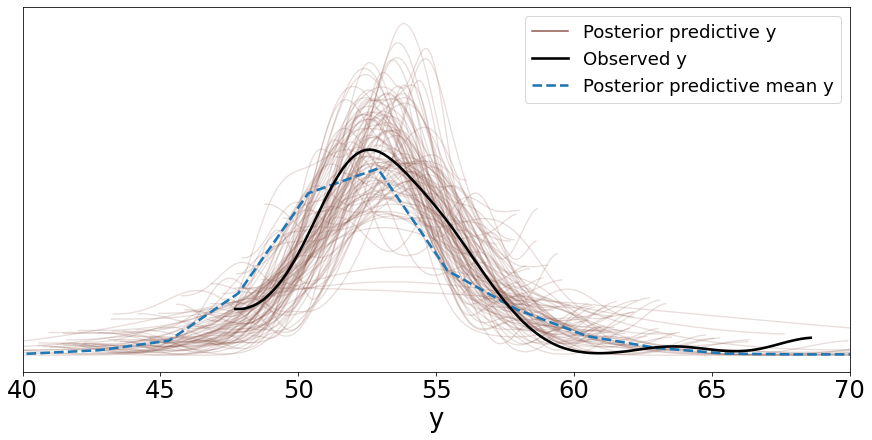

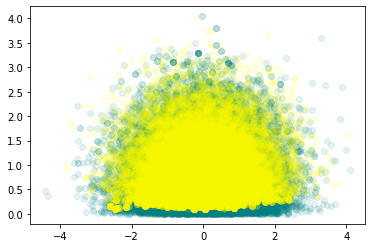

Posterior Predictive Check¶

We can draw samples from the inferred posterior distribution to check to see how they line up with the observed values. Below, we draw 100 samples of length corresponding to that of the data from this posterior. You are returned a dictionary for each of the observed variables in the model.

y_pred_g = pm.sample_posterior_predictive(trace_g, 100, model_g)

print("Shape of the sampled variable y and data ",np.shape(y_pred_g['y']), len(data))

100%|██████████| 100/100 [00:00<00:00, 998.82it/s]

Shape of the sampled variable y and data (100, 47) 47

y_pred_g['y'][0]

array([45.53260993, 54.10289681, 48.66040023, 53.77971592, 56.46850139,

53.04692598, 58.67379494, 53.78900017, 49.40837911, 53.89149333,

50.7769683 , 52.76403229, 56.49493798, 49.45154213, 50.9368833 ,

52.15412225, 53.68402289, 54.5480772 , 55.48493177, 50.47109214,

60.26921123, 63.47612414, 52.57626571, 55.85031574, 57.09879031,

53.60009516, 53.60991658, 57.54224547, 54.01678025, 55.67096381,

53.68052361, 53.62875786, 56.11198038, 48.00436409, 53.1587586 ,

55.4568336 , 54.16217932, 54.45011005, 59.10133838, 52.74692729,

57.09680258, 63.12037032, 56.84681701, 52.67425532, 59.41026027,

49.80051262, 53.30718519])

You can also plot the distribution of these samples by passing this variable ‘y_pred_g’ as shown below. Setting mean=True in the call to plot_ppc computes the mean distribution of the 100 sampled distributions and plots it as well.

data_ppc = az.from_pymc3(trace=trace_g, posterior_predictive=y_pred_g)

ax = az.plot_ppc(data_ppc, figsize=(12, 6), mean=True)

ax[0].legend(fontsize=15)

arviz.data.io_pymc3 - WARNING - posterior predictive shape not compatible with number of chains and draws. This can mean that some draws or even whole chains are not represented.

<matplotlib.legend.Legend at 0x7fc327c15d30>

Two things can be noted here:

The mean distribution of the samples from the posterior predictive distribution is close to the distribution of the observed data but the mean of this mean distribution is slightly shifted to the right.

Also, the variance of the samples; whether we can say qualitatively that this is acceptable or not depends on the problem. In general, the more representative data points available to us, the lower the variance.

Another thing to note here is that we modeled this problem using a Gaussian distribution, however we have some outliers that need to be accounted for which we cannot do well with a Gaussian distribution. We will see below how to use a Student’s t-distribution for that.

Robust Models with a Student’s t-Distribution¶

As mentioned in the previous section, one of the issues with assuming a Gaussian distribution is the assumption of finite variance. When you have observed data that lies outside this ‘boundary’, a Gaussian distribution is not a good fit and PyMC3, and other MCMC-based tools will be unable to reconcile these differences appropriately.

This distribution is parameterized by the following:

μ corresponds to the mean of the distribution

σ is the scale and corresponds roughly to the standard deviation

ν is the degrees of freedom and takes values between 0 and \(\infty\). The degrees of freedom corresponds to the number of independent observations minus 1. When the sample size is 8, the t-distribution used to model this would have degrees of freedom set to 7. A value of 1 corresponds to the Cauchy distribution and indicates heavy tails, while infinity corresponds to a Normal distribution.

The probability density function for a zero-centered Student’s t-distribution with scale set to one is given by:

\(p(t) = \dfrac{\gamma ((v+1) / 2)}{\sqrt{v \pi} \gamma (v/2)} (1 + \dfrac{t^2}{v})^{-(v+1)/2}\)

In this case, the mean of the distribution is 0 and the variance is given by ν/(ν - 2).

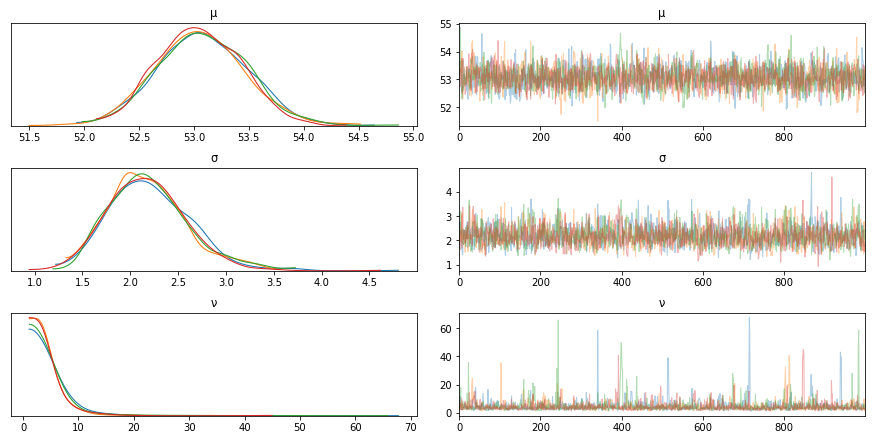

Now let us model the same problem with this distribution instead of a Normal.

with pm.Model() as model_t:

μ = pm.Uniform('μ', 40, 75) # mean

σ = pm.HalfNormal('σ', sd=10)

ν = pm.Exponential('ν', 1/30)

y = pm.StudentT('y', mu=μ, sd=σ, nu=ν, observed=data)

trace_t = pm.sample(1000)

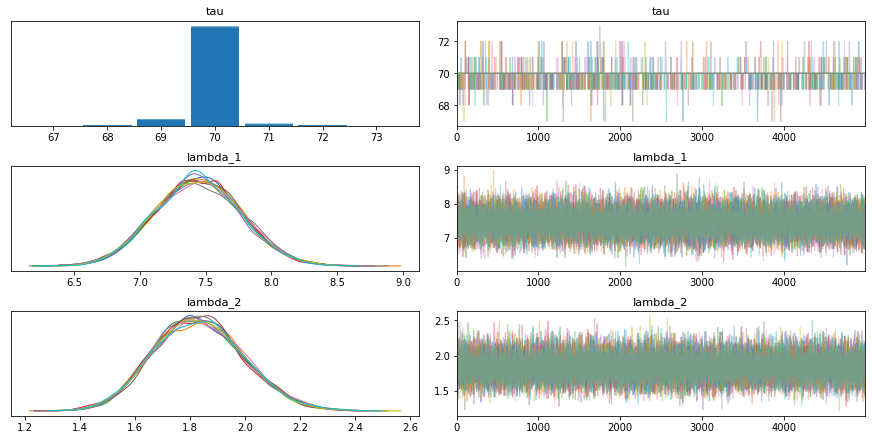

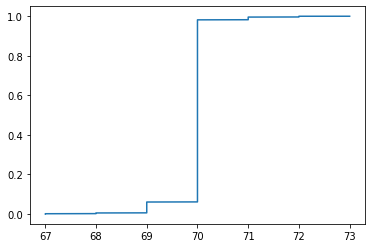

az.plot_trace(trace_t)

pm.model_to_graphviz(model_t)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [ν, σ, μ]

Sampling 4 chains, 0 divergences: 100%|██████████| 6000/6000 [00:04<00:00, 1461.34draws/s]

Using a student’s t-distribution we notice that the outliers are captured more accurately now and the model fits better.

# Using a student's t distribution we notice that the outliers are captured more

# accurately now and the model fits better

y_ppc_t = pm.sample_posterior_predictive(

trace_t, 100, model_t, random_seed=123)

y_pred_t = az.from_pymc3(trace=trace_t, posterior_predictive=y_ppc_t)

az.plot_ppc(y_pred_t, figsize=(12, 6), mean=True)

ax[0].legend(fontsize=15)

plt.xlim(40, 70)

100%|██████████| 100/100 [00:00<00:00, 1263.33it/s]

arviz.data.io_pymc3 - WARNING - posterior predictive shape not compatible with number of chains and draws. This can mean that some draws or even whole chains are not represented.

(40.0, 70.0)

Reading - Bayesian Estimation to Determine the Effectiveness of Drugs¶

https://docs.pymc.io/notebooks/BEST.html

Hierarchical Models or Multilevel Models¶

Suppose we want to perform an analysis of water quality in a state and information is available from each district in the state. There are two ways to model this now:

We can study each district separately, however we lose information especially if there is insufficient data for some districts. But we get a more detailed model per district.

The second option is to combine all the data and estimate the water quality of the state as a whole, i.e. a pooled model. We have more data but we lose granular information about each district.

The hierarchical model combines both of these options, by sharing information between the districts using hyperpriors that are priors over the parameter priors. In other words, instead of setting the prior parameters (or hyperparameters) to a constant value, we draw it from another prior distribution called the hyperprior. This hyperprior is shared among all the districts, and as a result information is shared between all the groups in the data.

Problem Statement¶

We measure the water samples for three districts, and we collect 30 samples for each district. The data is simply a binary value that indicates whether the water is contaminated or not. We count the number of samples that have contamination below the acceptable levels. We generate three arrays:

N_samples - The total number of samples collected for each district or group

G_samples - The number of good samples or samples with contamination levels below a certain threshold

group_idx - The id for each district or group

Artifically generate the data¶

N_samples = [30, 30, 30] # Total number of samples collected

G_samples = [18, 18, 18] # Number of samples with water contamination

# below accepted levels

# Create an ID for each of the 30 + 30 + 30 samples - 0,1,2 to indicate that they

# belong to different groups

group_idx = np.repeat(np.arange(len(N_samples)), N_samples)

data = []

for i in range(0, len(N_samples)):

data.extend(np.repeat([1, 0], [G_samples[i], N_samples[i]-G_samples[i]]))

# ID per sample

group_idx

array([0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2,

2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2,

2, 2])

data

[1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0]

The Sampling Model¶

The scenario presented here is essentially a binary classification problem that can be modeled using a Bernoulli distribution. The parameter of the Bernoulli distribution is a vector corresponding to each group (\(\theta_1, \theta_2, \theta_3\)) and indicates the probability of getting a good sample (in each group). Since this is a hierarchical model, each group shares information and as a result the parameter of Group 1 can be influenced by the samples in Group 2 and 3. This is what makes hierarchical modeling so powerful.

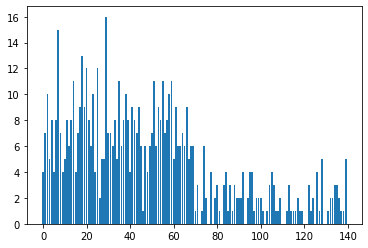

The process of generating our samples looks like the following. If we start from the last equation and work our way up, we can see that \(\theta_i\) and \(y_i\) are similar to a pooled model except that the beta prior takes parameters \(\alpha\) and \(\beta\) instead of constant values. These parameters now have hyperpriors applied to them using the parameters \(\mu\) and k which are assumed to be distributed using a beta distribution and a half-Normal distribution respectively. Note that \(\alpha\) and \(\beta\) are indirectly computed from the terms \(\mu\) and k here. \(\mu\) affects the mean of the beta distribution and increasing k makes the beta distribution more concentrated. This parameterization is more efficient than the direct parameterization in terms of \(\alpha_i\) and \(\beta_i\).

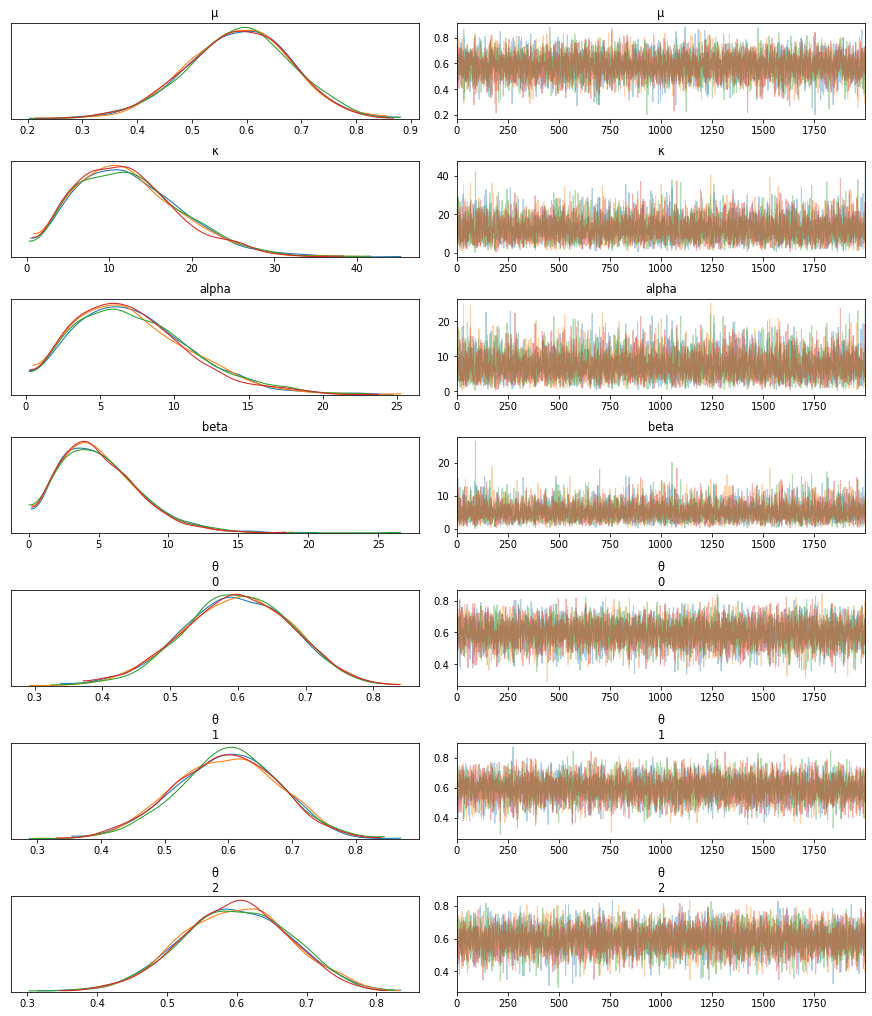

def get_hyperprior_model(data, N_samples, group_idx):

with pm.Model() as model_h:

μ = pm.Beta('μ', 1., 1.) # hyperprior

κ = pm.HalfNormal('κ', 10) # hyperprior

alpha = pm.Deterministic('alpha', μ*κ)

beta = pm.Deterministic('beta', (1.0-μ)*κ)

θ = pm.Beta('θ', alpha=alpha, beta=beta, shape=len(N_samples)) # prior, len(N_samples) = 3

y = pm.Bernoulli('y', p=θ[group_idx], observed=data)

trace_h = pm.sample(2000)

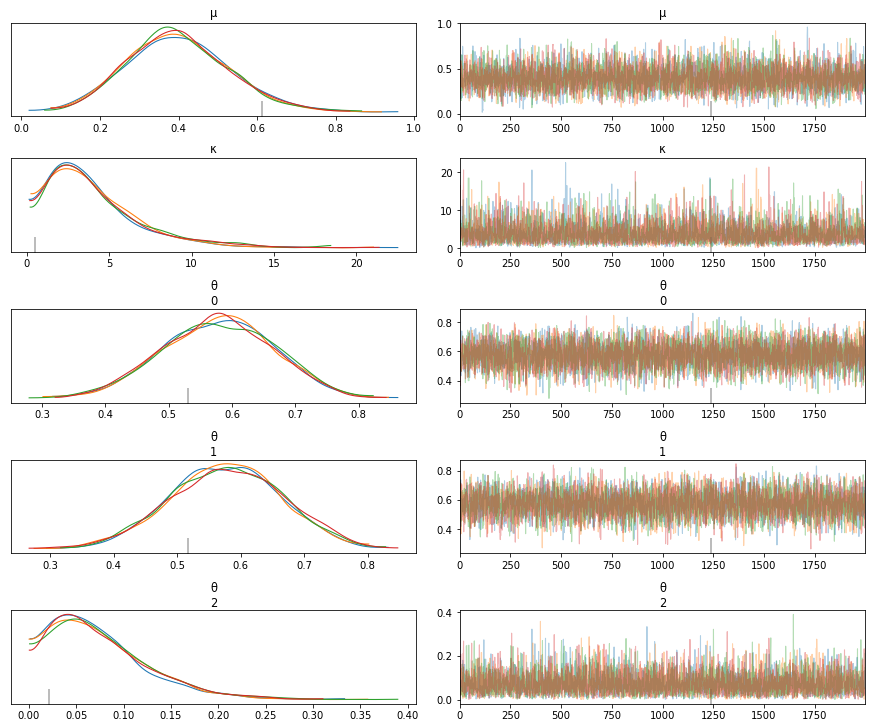

az.plot_trace(trace_h)

print(az.summary(trace_h))

return(model_h)

model = get_hyperprior_model(data, N_samples, group_idx)

pm.model_to_graphviz(model)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [θ, κ, μ]

Sampling 4 chains, 0 divergences: 100%|██████████| 10000/10000 [00:15<00:00, 657.97draws/s]

mean sd hpd_3% hpd_97% mcse_mean mcse_sd ess_mean ess_sd \

μ 0.583 0.095 0.412 0.766 0.001 0.001 5915.0 5915.0

κ 12.412 6.265 1.931 23.805 0.084 0.059 5625.0 5625.0

alpha 7.284 3.905 0.716 14.192 0.052 0.037 5633.0 5633.0

beta 5.128 2.793 0.662 10.095 0.037 0.026 5603.0 5603.0

θ[0] 0.598 0.080 0.445 0.742 0.001 0.001 6702.0 6567.0

θ[1] 0.597 0.080 0.445 0.743 0.001 0.001 6390.0 6330.0

θ[2] 0.597 0.078 0.453 0.743 0.001 0.001 6441.0 6325.0

ess_bulk ess_tail r_hat

μ 5940.0 5481.0 1.0

κ 5070.0 4299.0 1.0

alpha 4991.0 4178.0 1.0

beta 5097.0 4733.0 1.0

θ[0] 6649.0 5038.0 1.0

θ[1] 6359.0 5490.0 1.0

θ[2] 6413.0 5425.0 1.0

Shrinkage¶

Shrinkage refers to the phenomenon of sharing information among the groups through the use of hyperpriors. Hierarchical models can therefore be considered partially pooled models since information is shared among the groups so we move away from extreme values for the inferred parameters. This is good for two scenarios:

If we have outliers (or poor quality data) in our data groups.

If we do not have a lot of data.

In hierarchical models, the groups are neither independent (unpooled) nor do we clump all the data together (pooled) without accounting for the differences in the groups.

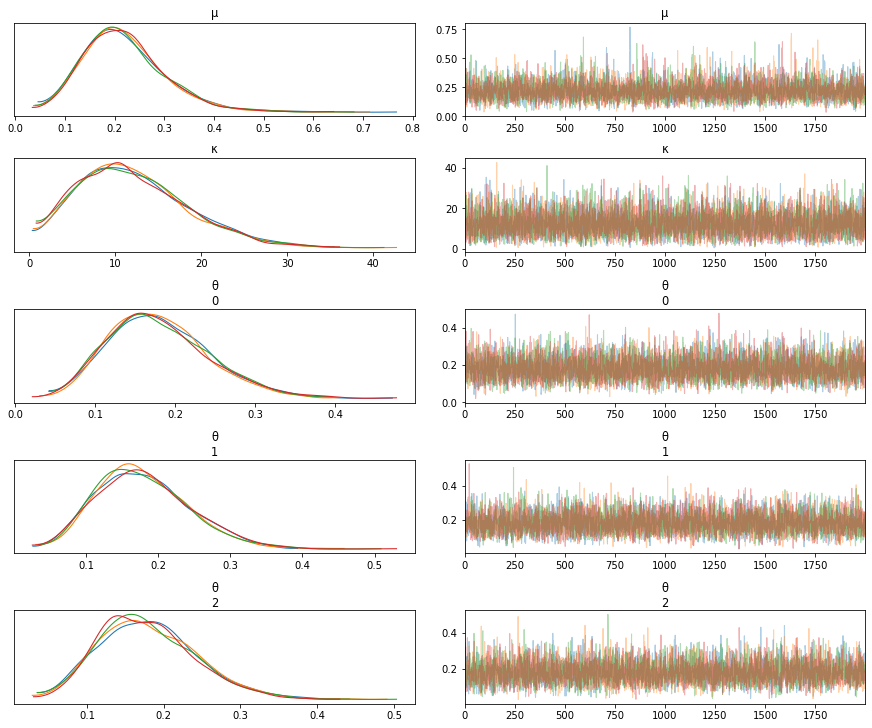

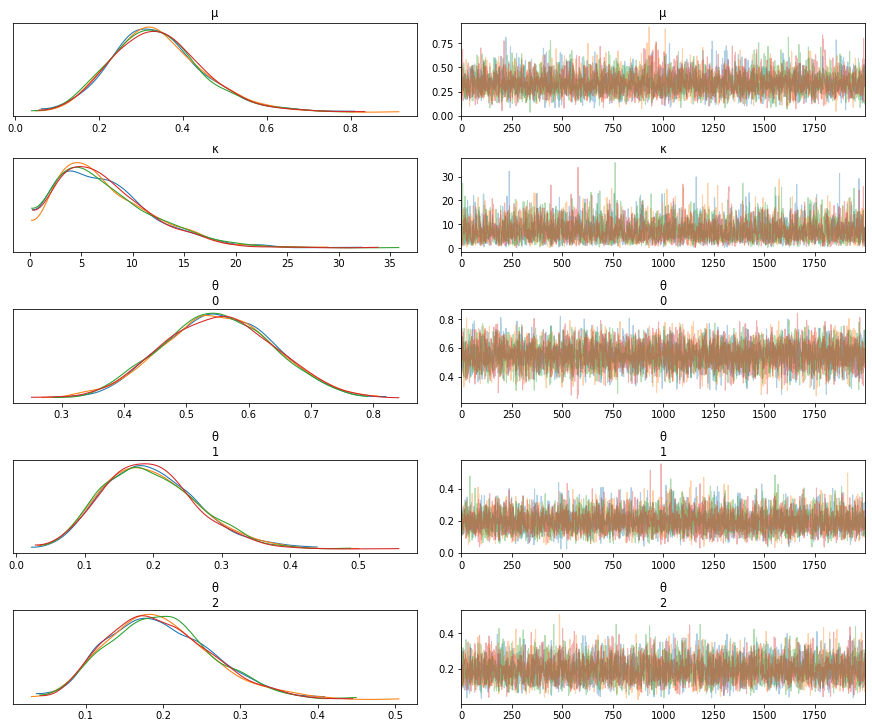

We can look at three cases below as examples to illustrate the benefits of a hierarchical model. We keep the total number of samples and groups the same as before, however we vary the number of good samples in each group. When there are significant differences in the number of good samples within the groups, the behavior is different from what we see in an independent model. Averages win and extreme values are avoided.

The values of G_samples are changed to have the following values

[5,5,5]

[18,5,5]

[18,18,1]

Note how the values of the three \(\theta\)s change as we change the values of G_samples.

# Case 1

N_samples = [30, 30, 30] # Total number of samples collected

G_samples = [5, 5, 5] # Number of samples with water contamination

# below accepted levels

# Create an id for each of the 30 + 30 + 30 samples - 0,1,2 to indicate that they

# belong to different groups

group_idx = np.repeat(np.arange(len(N_samples)), N_samples)

data = []

for i in range(0, len(N_samples)):

data.extend(np.repeat([1, 0], [G_samples[i], N_samples[i]-G_samples[i]]))

model = get_hyperprior_model(data, N_samples, group_idx)

model

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [θ, κ, μ]

Sampling 4 chains for 1_000 tune and 2_000 draw iterations (4_000 + 8_000 draws total) took 13 seconds.

/Users/srijith.rajamohan/opt/anaconda3/envs/pymc3/lib/python3.8/site-packages/arviz/data/io_pymc3.py:85: FutureWarning: Using `from_pymc3` without the model will be deprecated in a future release. Not using the model will return less accurate and less useful results. Make sure you use the model argument or call from_pymc3 within a model context.

warnings.warn(

/Users/srijith.rajamohan/opt/anaconda3/envs/pymc3/lib/python3.8/site-packages/arviz/data/io_pymc3.py:85: FutureWarning: Using `from_pymc3` without the model will be deprecated in a future release. Not using the model will return less accurate and less useful results. Make sure you use the model argument or call from_pymc3 within a model context.

warnings.warn(

mean sd hdi_3% hdi_97% mcse_mean mcse_sd ess_mean ess_sd \

μ 0.219 0.083 0.077 0.371 0.001 0.001 4711.0 4129.0

κ 12.114 6.257 1.954 23.998 0.080 0.056 6145.0 6145.0

θ[0] 0.179 0.062 0.072 0.297 0.001 0.001 6626.0 6626.0

θ[1] 0.178 0.063 0.070 0.296 0.001 0.001 6208.0 6196.0

θ[2] 0.179 0.062 0.073 0.301 0.001 0.001 6149.0 6149.0

ess_bulk ess_tail r_hat

μ 5071.0 4573.0 1.0

κ 5449.0 4138.0 1.0

θ[0] 6257.0 5240.0 1.0

θ[1] 6058.0 5071.0 1.0

θ[2] 5894.0 4777.0 1.0

# Case 2 - The value of theta_1 is now smaller compared to our original case

N_samples = [30, 30, 30] # Total number of samples collected

G_samples = [18, 5, 5] # Number of samples with water contamination

# below accepted levels

# Create an id for each of the 30 + 30 + 30 samples - 0,1,2 to indicate that they

# belong to different groups

group_idx = np.repeat(np.arange(len(N_samples)), N_samples)

data = []

for i in range(0, len(N_samples)):

data.extend(np.repeat([1, 0], [G_samples[i], N_samples[i]-G_samples[i]]))

get_hyperprior_model(data, N_samples, group_idx)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [θ, κ, μ]

Sampling 4 chains for 1_000 tune and 2_000 draw iterations (4_000 + 8_000 draws total) took 12 seconds.

/Users/srijith.rajamohan/opt/anaconda3/envs/pymc3/lib/python3.8/site-packages/arviz/data/io_pymc3.py:85: FutureWarning: Using `from_pymc3` without the model will be deprecated in a future release. Not using the model will return less accurate and less useful results. Make sure you use the model argument or call from_pymc3 within a model context.

warnings.warn(

/Users/srijith.rajamohan/opt/anaconda3/envs/pymc3/lib/python3.8/site-packages/arviz/data/io_pymc3.py:85: FutureWarning: Using `from_pymc3` without the model will be deprecated in a future release. Not using the model will return less accurate and less useful results. Make sure you use the model argument or call from_pymc3 within a model context.

warnings.warn(

mean sd hdi_3% hdi_97% mcse_mean mcse_sd ess_mean ess_sd \

μ 0.340 0.110 0.130 0.534 0.002 0.001 5276.0 4919.0

κ 7.385 4.552 0.755 15.699 0.069 0.049 4299.0 4299.0

θ[0] 0.550 0.088 0.389 0.714 0.001 0.001 5232.0 5213.0

θ[1] 0.196 0.068 0.072 0.320 0.001 0.001 5489.0 5489.0

θ[2] 0.196 0.067 0.076 0.321 0.001 0.001 4750.0 4750.0

ess_bulk ess_tail r_hat

μ 5436.0 5137.0 1.0

κ 4053.0 4706.0 1.0

θ[0] 5227.0 5154.0 1.0

θ[1] 5250.0 4633.0 1.0

θ[2] 4585.0 4605.0 1.0

# Case 3 - Value of theta_3 is not as small as it would have been if it were estimated individually

N_samples = [30, 30, 30] # Total number of samples collected

G_samples = [18, 18, 1] # Number of samples with water contamination

# below accepted levels

# Create an id for each of the 30 + 30 + 30 samples - 0,1,2 to indicate that they

# belong to different groups

group_idx = np.repeat(np.arange(len(N_samples)), N_samples)

data = []

for i in range(0, len(N_samples)):

data.extend(np.repeat([1, 0], [G_samples[i], N_samples[i]-G_samples[i]]))

get_hyperprior_model(data, N_samples, group_idx)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [θ, κ, μ]

Sampling 4 chains for 1_000 tune and 2_000 draw iterations (4_000 + 8_000 draws total) took 12 seconds.

There was 1 divergence after tuning. Increase `target_accept` or reparameterize.

/Users/srijith.rajamohan/opt/anaconda3/envs/pymc3/lib/python3.8/site-packages/arviz/data/io_pymc3.py:85: FutureWarning: Using `from_pymc3` without the model will be deprecated in a future release. Not using the model will return less accurate and less useful results. Make sure you use the model argument or call from_pymc3 within a model context.

warnings.warn(

/Users/srijith.rajamohan/opt/anaconda3/envs/pymc3/lib/python3.8/site-packages/arviz/data/io_pymc3.py:85: FutureWarning: Using `from_pymc3` without the model will be deprecated in a future release. Not using the model will return less accurate and less useful results. Make sure you use the model argument or call from_pymc3 within a model context.

warnings.warn(

mean sd hdi_3% hdi_97% mcse_mean mcse_sd ess_mean ess_sd \

μ 0.398 0.129 0.156 0.638 0.002 0.001 5504.0 5218.0

κ 4.058 2.846 0.280 9.217 0.044 0.034 4167.0 3573.0

θ[0] 0.577 0.087 0.419 0.740 0.001 0.001 6018.0 6005.0

θ[1] 0.576 0.087 0.416 0.738 0.001 0.001 5558.0 5450.0

θ[2] 0.075 0.051 0.001 0.165 0.001 0.001 5150.0 4729.0

ess_bulk ess_tail r_hat

μ 5575.0 5216.0 1.0

κ 4547.0 3757.0 1.0

θ[0] 6013.0 5153.0 1.0

θ[1] 5535.0 4996.0 1.0

θ[2] 5056.0 4368.0 1.0

GRADED EVALUATION (18 mins)¶

According to the Central Limit Theorem, the mean of the sample means tends to the true population mean as the number of samples increase

a. True

b. False

Many real-world phenomena are averages of various factors, hence it is reasonable to use a Gaussian distribution to model them

a. True

b. False

What type of distribution is better suited to modeling positive values?

a. Normal

b. Half-normal

Posterior predictive checks can be used to verify that the inferred distribution is similar to the observed data

a. True

b. False

Which distribution is better suited to model data that has a lot of outliers?

a. Gaussian distribution

b. Student’s t-distribution

Hierarchical models are beneficial in modeling data from groups where there might be limited data in certain groups

a. True

b. False

Hierarchical models share information through hyperpriors

a. True

b. False

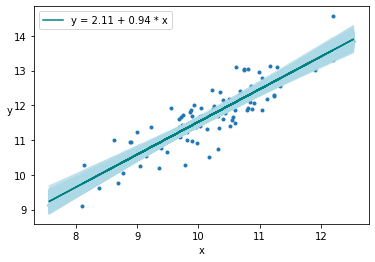

Linear Regression Again!¶

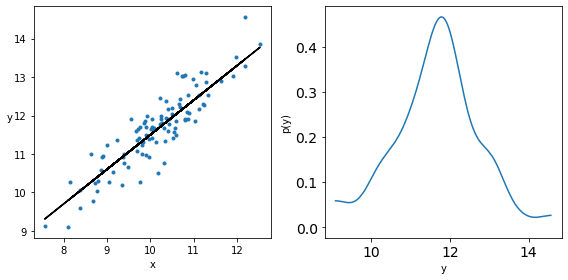

Let us generate some data for linear regression and plot it along with its density.

np.random.seed(1)

N = 100

# Parameters

alpha_real = 2.5

beta_real = 0.9

eps_real = np.random.normal(0, 0.5, size=N)

# Input data drawn from a Normal distribution

x = np.random.normal(10, 1, N)

# Output generated from the input and the parameters

y_real = alpha_real + beta_real * x

# Add random noise to y

y = y_real + eps_real

# Plot the data

_, ax = plt.subplots(1,2, figsize=(8, 4))

ax[0].plot(x, y, 'C0.')

ax[0].set_xlabel('x')

ax[0].set_ylabel('y', rotation=0)

ax[0].plot(x, y_real, 'k')

az.plot_kde(y, ax=ax[1])

ax[1].set_xlabel('y')

ax[1].set_ylabel('p(y)')

plt.tight_layout()

Inference of Parameters in Linear Regression¶

import pymc3 as pm

with pm.Model() as model_g:

α = pm.Normal('α', mu=0, sd=10)

β = pm.Normal('β', mu=0, sd=1)

ϵ = pm.HalfCauchy('ϵ', 5) # Try changing this to a half normal, half cauchy has fatter tails

μ = pm.Deterministic('μ', α + β * x)

y_pred = pm.Normal('y_pred', mu=μ, sd=ϵ, observed=y)

trace_g = pm.sample(2000, tune=1000)

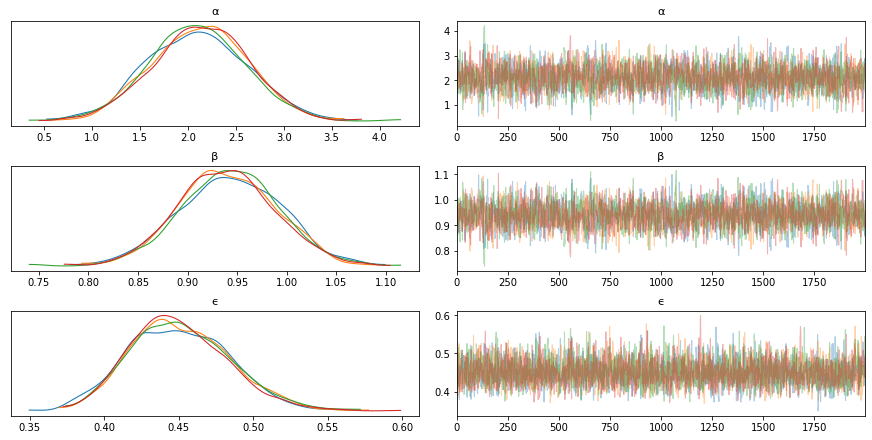

az.plot_trace(trace_g, var_names=['α', 'β', 'ϵ']) # if you have a lot of variables, explicitly specify

plt.figure()

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [ϵ, β, α]

Sampling 4 chains for 1_000 tune and 2_000 draw iterations (4_000 + 8_000 draws total) took 33 seconds.

<Figure size 432x288 with 0 Axes>

<Figure size 432x288 with 0 Axes>

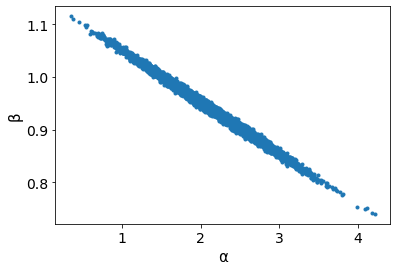

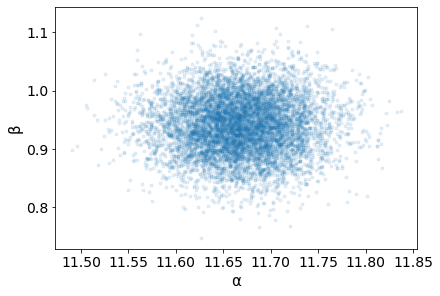

Parameter Correlations¶

# Pairplot

az.plot_pair(trace_g, var_names=['α', 'β'], plot_kwargs={'alpha': 0.1}) # Notice the diagonal shape

<AxesSubplot:xlabel='α', ylabel='β'>

Visualize the Uncertainty¶

plt.figure()

# Plot the true values

plt.plot(x, y, 'C0.')

# Get the mean inferred values

alpha_m = trace_g['α'].mean()

beta_m = trace_g['β'].mean()

# Plot all draws to show the variance of the regression lines

draws = range(0, len(trace_g['α']), 10)

plt.plot(x, trace_g['α'][draws] + trace_g['β'][draws]* x[:, np.newaxis], c='lightblue', alpha=0.5)

# Plot the mean regression line

plt.plot(x, alpha_m + beta_m * x, c='teal', label=f'y = {alpha_m:.2f} + {beta_m:.2f} * x')

plt.xlabel('x')

plt.ylabel('y', rotation=0)

plt.legend()

<matplotlib.legend.Legend at 0x7fbd6b3a2d00>

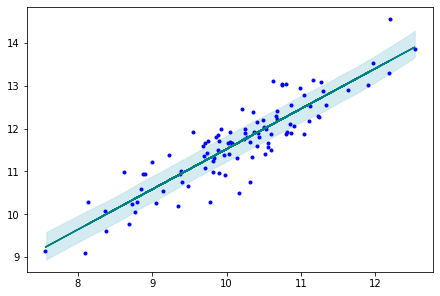

Posterior Sampling¶

ppc = pm.sample_posterior_predictive(trace_g,

samples=2000,

model=model_g)

# Plot the posterior predicted samples, i.e. these are samples of predicted y for each original x in our data

az.plot_hpd(x, ppc['y_pred'], credible_interval=0.5, color='lightblue')

# Plot the true y values

plt.plot(x, y, 'b.')

# Plot the mean regression line - from cell above

plt.plot(x, alpha_m + beta_m * x, c='teal')

[<matplotlib.lines.Line2D at 0x7fbd6bcf63d0>]

Mean-center the Data¶

Looking at the pairplot of \(\alpha\) and \(\beta\), one can notice the high degree of correlation between these two variables as indicated by the narrow joint density. This results in a parameter posterior space that is diagonally shaped, which is problematic for many samplers such as the Metropolis-Hastings MCMC sampler. One recommended approach to minimize this correlation is to center the independent variables. If \(\bar{x}\) is the mean of the data x then

The advantage of this is twofold:

The pivot point is the intercept when the slope changes

The parameter posterior space is more circular

Transformation¶

In order to center the data, the original equation for linear regression given by

has to be equivalent to the equation for the centered data

Recovering the data¶

This implies that we can recover the original intercept \(\alpha\) as

and \(\beta\) as

Standardize the data¶

You can also standardize the data by mean centering and dividing by the standard deviation

Mean Centered - Broader Sampling Space¶

# Center the data

x_centered = x - x.mean()

with pm.Model() as model_g:

α = pm.Normal('α', mu=0, sd=10)

β = pm.Normal('β', mu=0, sd=1)

ϵ = pm.HalfCauchy('ϵ', 5)

μ = pm.Deterministic('μ', α + β * x_centered)

y_pred = pm.Normal('y_pred', mu=μ, sd=ϵ, observed=y)

α_recovered = pm.Deterministic('α_recovered', α - β * x.mean())

trace_g = pm.sample(2000, tune=1000)

az.plot_trace(trace_g, var_names=['α', 'β', 'ϵ'])

plt.figure()

az.plot_pair(trace_g, var_names=['α', 'β'], plot_kwargs={'alpha': 0.1})

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [ϵ, β, α]

Sampling 4 chains, 0 divergences: 100%|██████████| 12000/12000 [00:05<00:00, 2088.47draws/s]

<matplotlib.axes._subplots.AxesSubplot at 0x7fc2f92fda90>

<Figure size 432x288 with 0 Axes>

Robust Linear Regression¶

We fitted our model parameters by assuming the data likelihood was a Normal distribution, however as we saw earlier this assumption suffers from not doing well with outliers. Our solution to this problem is the same, use a Student’s t-distribution for the likelihood.

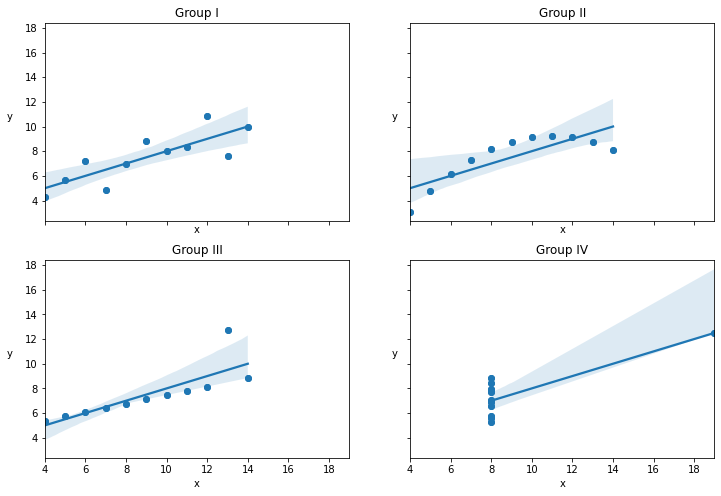

Here we look at the Anscombe’s quartet, which is a set of 4 data sets. They have similar statistical properties even though they look very different and were used to illustrate the need to visualize the data along with the effect of outliers. Our intended goal is the same, to model data with outliers and assess the sensitivity of the model to these outliers.

import seaborn as sns

from scipy import stats

# Load the example dataset for Anscombe's quartet

df = sns.load_dataset("anscombe")

df

| dataset | x | y | |

|---|---|---|---|

| 0 | I | 10.0 | 8.04 |

| 1 | I | 8.0 | 6.95 |

| 2 | I | 13.0 | 7.58 |

| 3 | I | 9.0 | 8.81 |

| 4 | I | 11.0 | 8.33 |

| 5 | I | 14.0 | 9.96 |

| 6 | I | 6.0 | 7.24 |

| 7 | I | 4.0 | 4.26 |

| 8 | I | 12.0 | 10.84 |

| 9 | I | 7.0 | 4.82 |

| 10 | I | 5.0 | 5.68 |

| 11 | II | 10.0 | 9.14 |

| 12 | II | 8.0 | 8.14 |

| 13 | II | 13.0 | 8.74 |

| 14 | II | 9.0 | 8.77 |

| 15 | II | 11.0 | 9.26 |

| 16 | II | 14.0 | 8.10 |

| 17 | II | 6.0 | 6.13 |

| 18 | II | 4.0 | 3.10 |

| 19 | II | 12.0 | 9.13 |

| 20 | II | 7.0 | 7.26 |

| 21 | II | 5.0 | 4.74 |

| 22 | III | 10.0 | 7.46 |

| 23 | III | 8.0 | 6.77 |

| 24 | III | 13.0 | 12.74 |

| 25 | III | 9.0 | 7.11 |

| 26 | III | 11.0 | 7.81 |

| 27 | III | 14.0 | 8.84 |

| 28 | III | 6.0 | 6.08 |

| 29 | III | 4.0 | 5.39 |

| 30 | III | 12.0 | 8.15 |

| 31 | III | 7.0 | 6.42 |

| 32 | III | 5.0 | 5.73 |

| 33 | IV | 8.0 | 6.58 |

| 34 | IV | 8.0 | 5.76 |

| 35 | IV | 8.0 | 7.71 |

| 36 | IV | 8.0 | 8.84 |

| 37 | IV | 8.0 | 8.47 |

| 38 | IV | 8.0 | 7.04 |

| 39 | IV | 8.0 | 5.25 |

| 40 | IV | 19.0 | 12.50 |

| 41 | IV | 8.0 | 5.56 |

| 42 | IV | 8.0 | 7.91 |

| 43 | IV | 8.0 | 6.89 |

Plot the 4 Subgroups in the Data¶

x_0 = df[df.dataset == 'I']['x'].values

y_0 = df[df.dataset == 'I']['y'].values

x_1 = df[df.dataset == 'II']['x'].values

y_1 = df[df.dataset == 'II']['y'].values

x_2 = df[df.dataset == 'III']['x'].values

y_2 = df[df.dataset == 'III']['y'].values

x_3 = df[df.dataset == 'IV']['x'].values

y_3 = df[df.dataset == 'IV']['y'].values

_, ax = plt.subplots(2, 2, figsize=(12,8), sharex=True, sharey=True)

print("Mean of x values in all groups -- ",x_0.mean(), x_1.mean(), x_2.mean(), x_3.mean())

print("Mean of y values in all groups -- ",y_0.mean(), y_1.mean(), y_2.mean(), y_3.mean())

print("Mean of x values in all groups -- ",x_0.var(), x_1.var(), x_2.var(), x_3.var())

print("Mean of y values in all groups -- ",y_0.var(), y_1.var(), y_2.var(), y_3.var())

ax = np.ravel(ax)

ax[0].scatter(x_0, y_0)

sns.regplot(x_0, y_0, ax=ax[0])

ax[0].set_title('Group I')

ax[0].set_xlabel('x')

ax[0].set_ylabel('y', rotation=0, labelpad=15)

ax[1].scatter(x_1, y_1)

sns.regplot(x_1, y_1, ax=ax[1])

ax[1].set_title('Group II')

ax[1].set_xlabel('x')

ax[1].set_ylabel('y', rotation=0, labelpad=15)

ax[2].scatter(x_2, y_2)

sns.regplot(x_2, y_2, ax=ax[2])

ax[2].set_title('Group III')

ax[2].set_xlabel('x')

ax[2].set_ylabel('y', rotation=0, labelpad=15)

ax[3].scatter(x_3, y_3)

sns.regplot(x_3, y_3, ax=ax[3])

ax[3].set_title('Group IV')

ax[3].set_xlabel('x')

ax[3].set_ylabel('y', rotation=0, labelpad=15)

Mean of x values in all groups -- 9.0 9.0 9.0 9.0

Mean of y values in all groups -- 7.500909090909093 7.50090909090909 7.5 7.500909090909091

Mean of x values in all groups -- 10.0 10.0 10.0 10.0

Mean of y values in all groups -- 3.7520628099173554 3.752390082644628 3.747836363636364 3.7484082644628103

Text(0, 0.5, 'y')

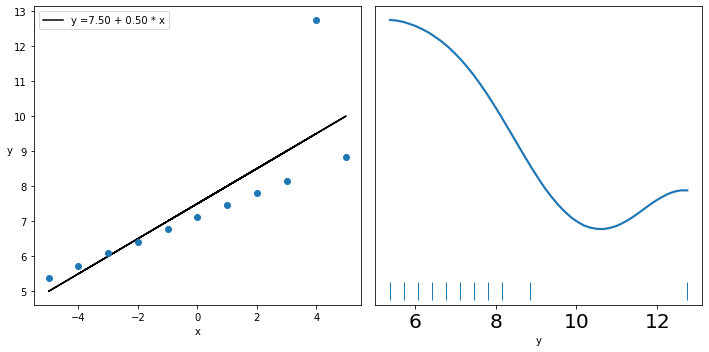

Plot Data Group 3 and its Kernel Density¶

x_2 = x_2 - x_2.mean()

_, ax = plt.subplots(1, 2, figsize=(10, 5))

beta_c, alpha_c = stats.linregress(x_2, y_2)[:2]

ax[0].plot(x_2, (alpha_c + beta_c * x_2), 'k',

label=f'y ={alpha_c:.2f} + {beta_c:.2f} * x')

ax[0].plot(x_2, y_2, 'C0o')

ax[0].set_xlabel('x')

ax[0].set_ylabel('y', rotation=0)

ax[0].legend(loc=0)

az.plot_kde(y_2, ax=ax[1], rug=True)

ax[1].set_xlabel('y')

ax[1].set_yticks([])

plt.tight_layout()

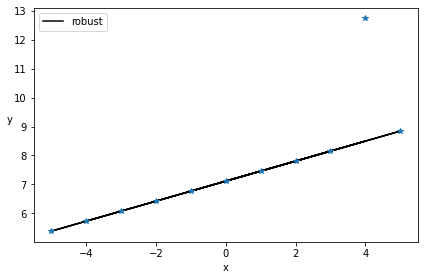

Model using a Student’s t Distribution¶

with pm.Model() as model_t:

α = pm.Normal('α', mu=y_2.mean(), sd=1)

β = pm.Normal('β', mu=0, sd=1)

ϵ = pm.HalfNormal('ϵ', 5)

ν_ = pm.Exponential('ν_', 1/29)

ν = pm.Deterministic('ν', ν_ + 1) # shifting the exponential to avoid values close to 0

y_pred = pm.StudentT('y_pred', mu=α + β * x_2,

sd=ϵ, nu=ν, observed=y_2)

trace_t = pm.sample(2000)

alpha_m = trace_t['α'].mean()

beta_m = trace_t['β'].mean()

plt.plot(x_2, alpha_m + beta_m * x_2, c='k', label='robust')

plt.plot(x_2, y_2, '*')

plt.xlabel('x')

plt.ylabel('y', rotation=0)

plt.legend(loc=2)

plt.tight_layout()

pm.model_to_graphviz(model_t)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [ν_, ϵ, β, α]

Sampling 4 chains, 0 divergences: 100%|██████████| 10000/10000 [00:08<00:00, 1229.46draws/s]

The number of effective samples is smaller than 25% for some parameters.

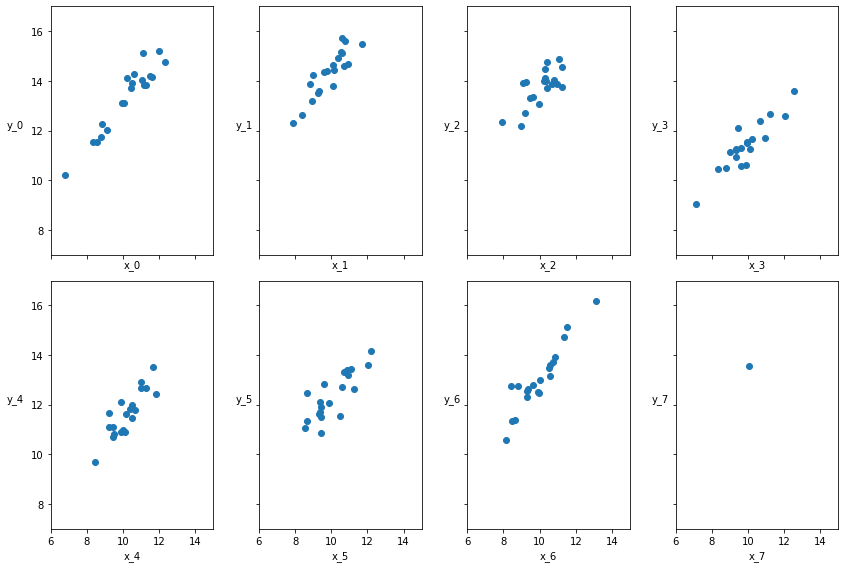

Hierarchical Linear Regression¶

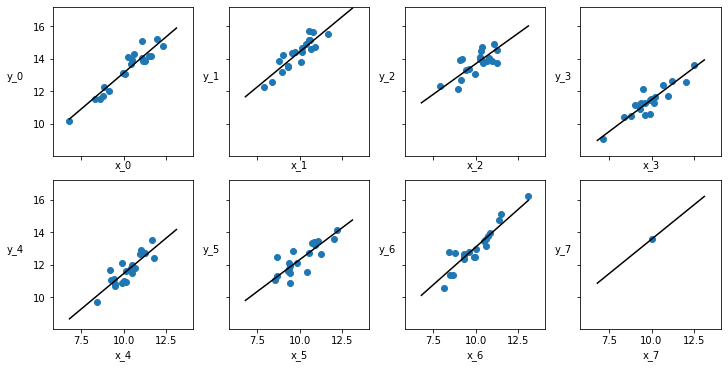

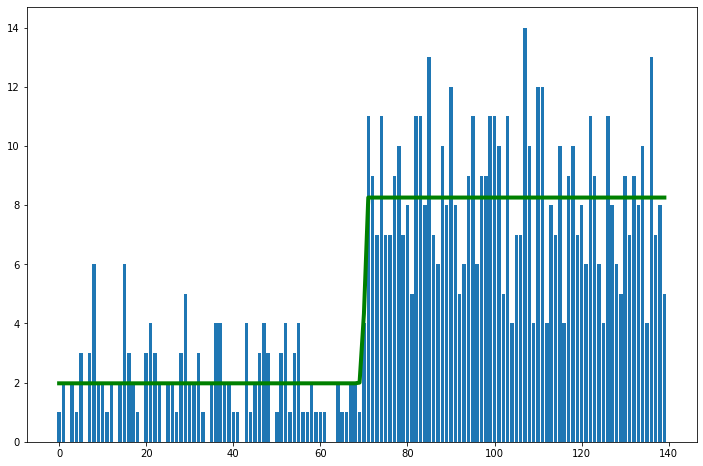

We want to use the same hierarchical or multilevel modeling technique that we discussed earlier, for linear regression problems as well. As mentioned above, this is particularly useful when presented with imbalanced subgroups of sparse data. In this example, we create data with 8 subgroups. In this data, 7 of the subgroups have 20 data points and the last one has a single data point.

The data for all the 8 groups are generated from a normal distribution of mean 10 and a standard deviation of 1. The parameters for the linear model are generated from the normal and beta distributions.

Data Generation¶

N = 20

M = 8

idx = np.repeat(range(M-1), N)

idx = np.append(idx, 7)

np.random.seed(314)

alpha_real = np.random.normal(4, 1, size=M)

beta_real = np.random.beta(7, 1, size=M)

eps_real = np.random.normal(0, 0.5, size=len(idx))

print("Alpha parameters ", alpha_real )

y_m = np.zeros(len(idx))

x_m = np.random.normal(10, 1, len(idx))

y_m = alpha_real[idx] + beta_real[idx] * x_m + eps_real

_, ax = plt.subplots(2, 4, figsize=(12,8), sharex=True, sharey=True)

ax = np.ravel(ax)

j, k = 0, N

for i in range(M):

ax[i].scatter(x_m[j:k], y_m[j:k])

ax[i].set_xlabel(f'x_{i}')

ax[i].set_ylabel(f'y_{i}', rotation=0, labelpad=15)

ax[i].set_xlim(6, 15)

ax[i].set_ylim(7, 17)

j += N

k += N

plt.tight_layout()

Alpha parameters [4.16608544 4.78196448 4.85228509 3.29292904 3.06834281 4.88666088

3.77821034 4.38172358]

Non-hierarchical Model¶

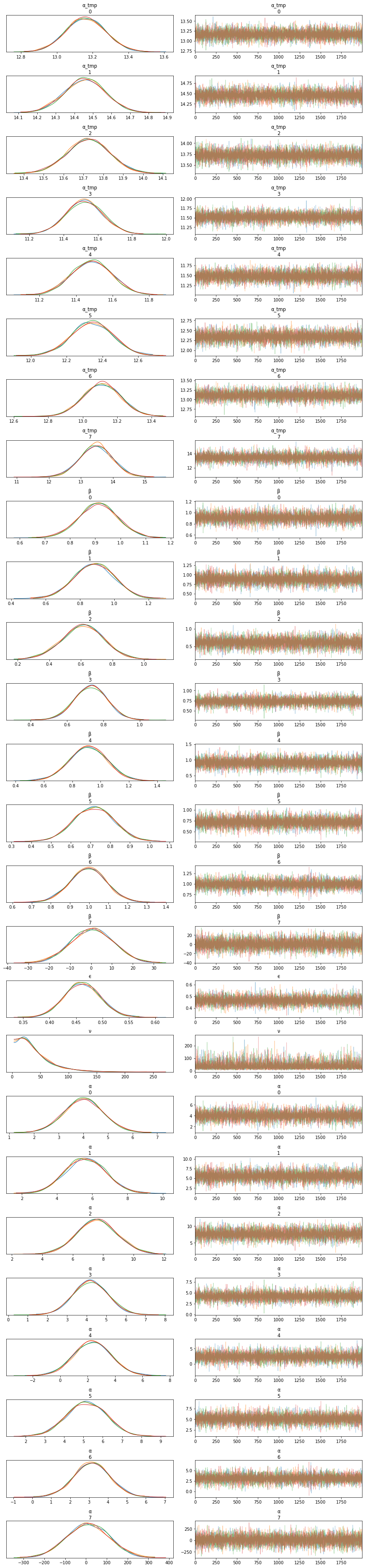

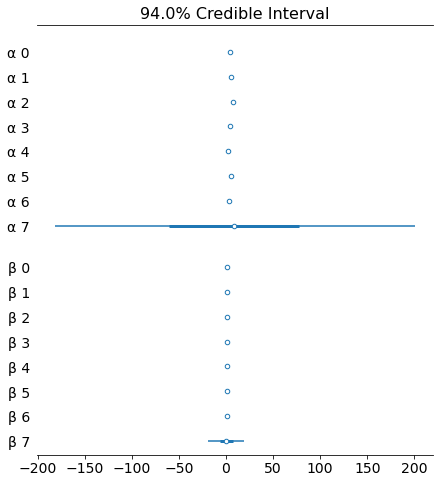

We build a non-hierarchical model first for comparison. We also mean-center the data for ease of convergence. Note how the obtained \(\alpha\) and \(\beta\) values vary for each group, particularly the scale of the last one, which is really off.

# Center the data

x_centered = x_m - x_m.mean()

with pm.Model() as unpooled_model:

# Note the M prior parameters for the M groups

α_tmp = pm.Normal('α_tmp', mu=2, sd=5, shape=M)

β = pm.Normal('β', mu=0, sd=10, shape=M)

ϵ = pm.HalfCauchy('ϵ', 5)

ν = pm.Exponential('ν', 1/30)

y_pred = pm.StudentT('y_pred', mu=α_tmp[idx] + β[idx] * x_centered, sd=ϵ, nu=ν, observed=y_m)

# Rescale alpha back - after x had been centered the computed alpha is different from the original alpha

α = pm.Deterministic('α', α_tmp - β * x_m.mean())

trace_up = pm.sample(2000)

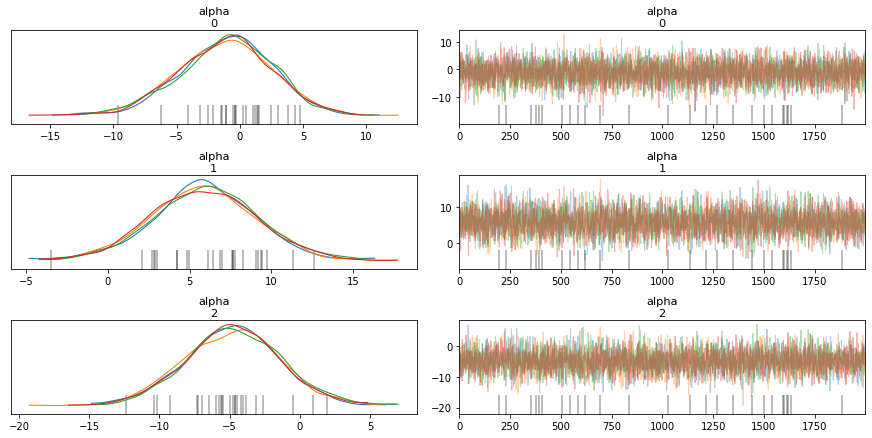

az.plot_trace(trace_up)

plt.figure()

az.plot_forest(trace_up, var_names=['α', 'β'], combined=True)

az.summary(trace_up)

pm.model_to_graphviz(unpooled_model)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [ν, ϵ, β, α_tmp]

Sampling 4 chains, 0 divergences: 100%|██████████| 10000/10000 [00:17<00:00, 584.40draws/s]

<Figure size 432x288 with 0 Axes>

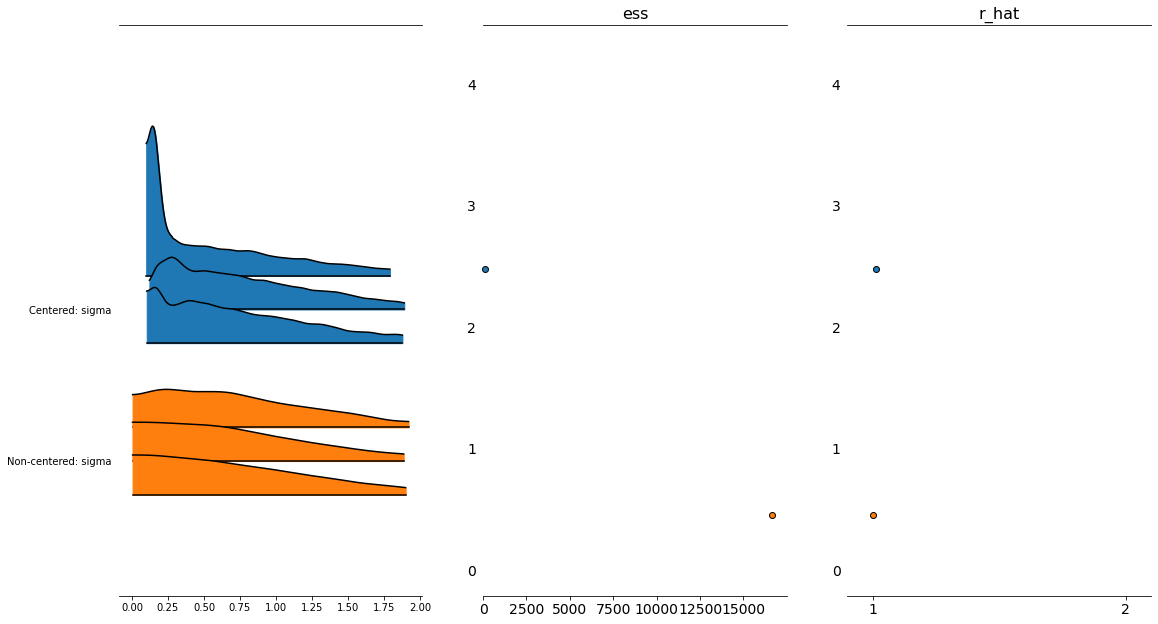

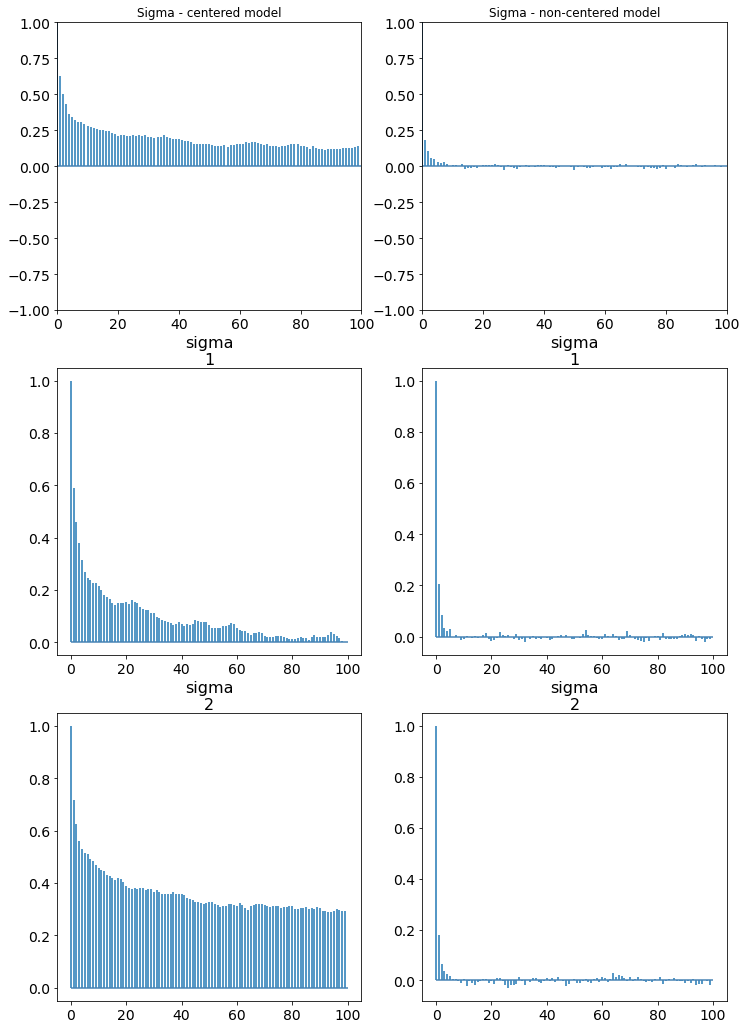

Hierarchical Model¶

We set hyperpriors on the \(\alpha\) and \(\beta\) parameters. To be more precise, the hyperpriors are applied to the scaled version of \(\alpha\), i.e. \(\alpha_{tmp}\).

with pm.Model() as hierarchical_model:

# Hyperpriors - we add these instead of setting the prior values to a constant

# Note that there exists only one hyperprior for all M groups, shared hyperprior

α_μ_tmp = pm.Normal('α_μ_tmp', mu=100, sd=1) # try changing these hyperparameters

α_σ_tmp = pm.HalfNormal('α_σ_tmp', 10) # try changing these hyperparameters

β_μ = pm.Normal('β_μ', mu=10, sd=2) # reasonable changes do not have an impact

β_σ = pm.HalfNormal('β_σ', sd=5)

# priors - note that the prior parameters are no longer a constant

α_tmp = pm.Normal('α_tmp', mu=α_μ_tmp, sd=α_σ_tmp, shape=M)

β = pm.Normal('β', mu=β_μ, sd=β_σ, shape=M)

ϵ = pm.HalfCauchy('ϵ', 5)

ν = pm.Exponential('ν', 1/30)

y_pred = pm.StudentT('y_pred',

mu=α_tmp[idx] + β[idx] * x_centered,

sd=ϵ, nu=ν, observed=y_m)

α = pm.Deterministic('α', α_tmp - β * x_m.mean())

α_μ = pm.Deterministic('α_μ', α_μ_tmp - β_μ *

x_m.mean())

α_σ = pm.Deterministic('α_sd', α_σ_tmp - β_μ * x_m.mean())

trace_hm = pm.sample(1000)

az.plot_forest(trace_hm, var_names=['α', 'β'], combined=True)

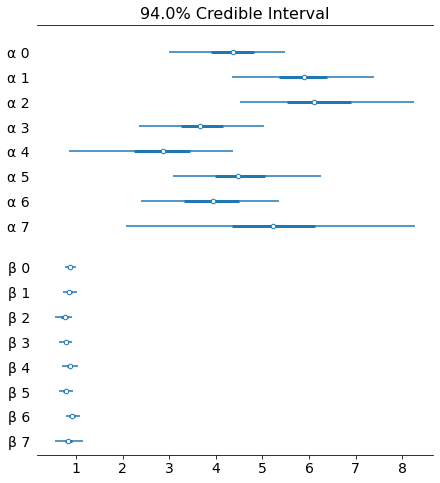

_, ax = plt.subplots(2, 4, figsize=(10, 5), sharex=True, sharey=True,

constrained_layout=True)

ax = np.ravel(ax)

j, k = 0, N

x_range = np.linspace(x_m.min(), x_m.max(), 10)

for i in range(M):

ax[i].scatter(x_m[j:k], y_m[j:k])

ax[i].set_xlabel(f'x_{i}')

ax[i].set_ylabel(f'y_{i}', labelpad=17, rotation=0)

alpha_m = trace_hm['α'][:, i].mean()

beta_m = trace_hm['β'][:, i].mean()

ax[i].plot(x_range, alpha_m + beta_m * x_range, c='k',

label=f'y = {alpha_m:.2f} + {beta_m:.2f} * x')

plt.xlim(x_m.min()-1, x_m.max()+1)

plt.ylim(y_m.min()-1, y_m.max()+1)

j += N

k += N

pm.model_to_graphviz(hierarchical_model)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [ν, ϵ, β, α_tmp, β_σ, β_μ, α_σ_tmp, α_μ_tmp]

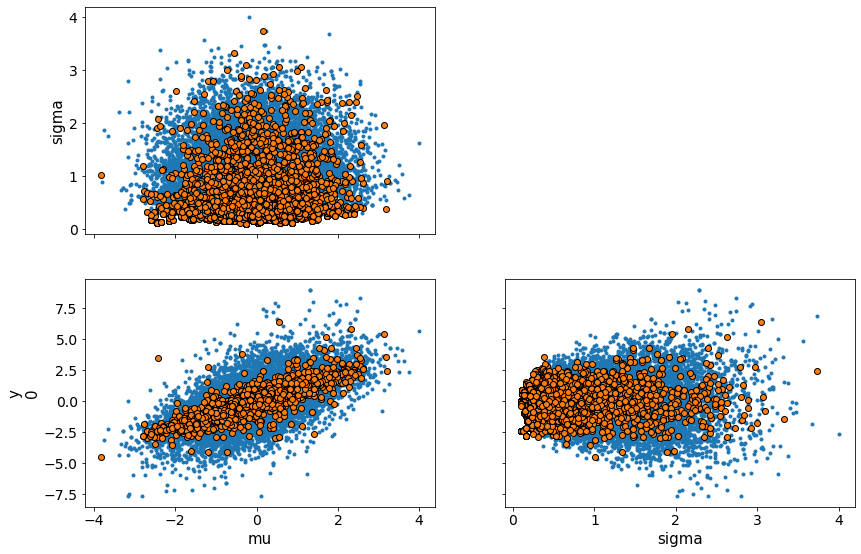

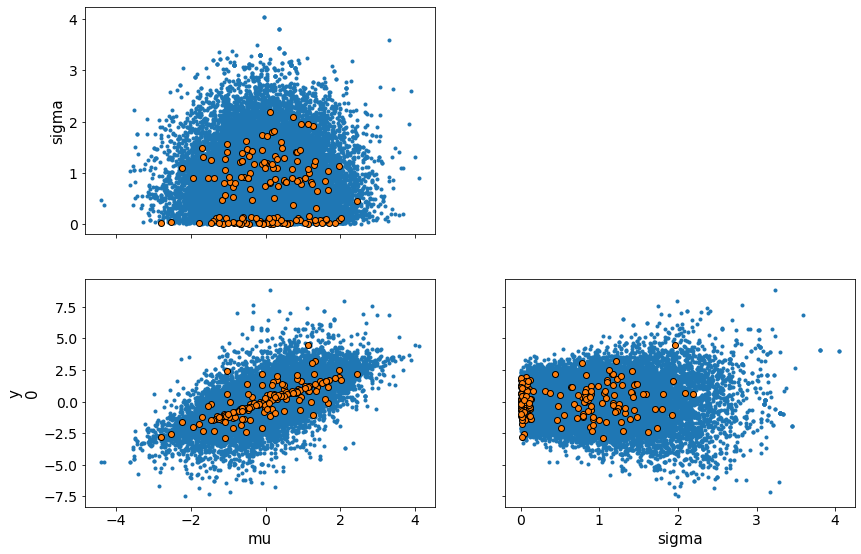

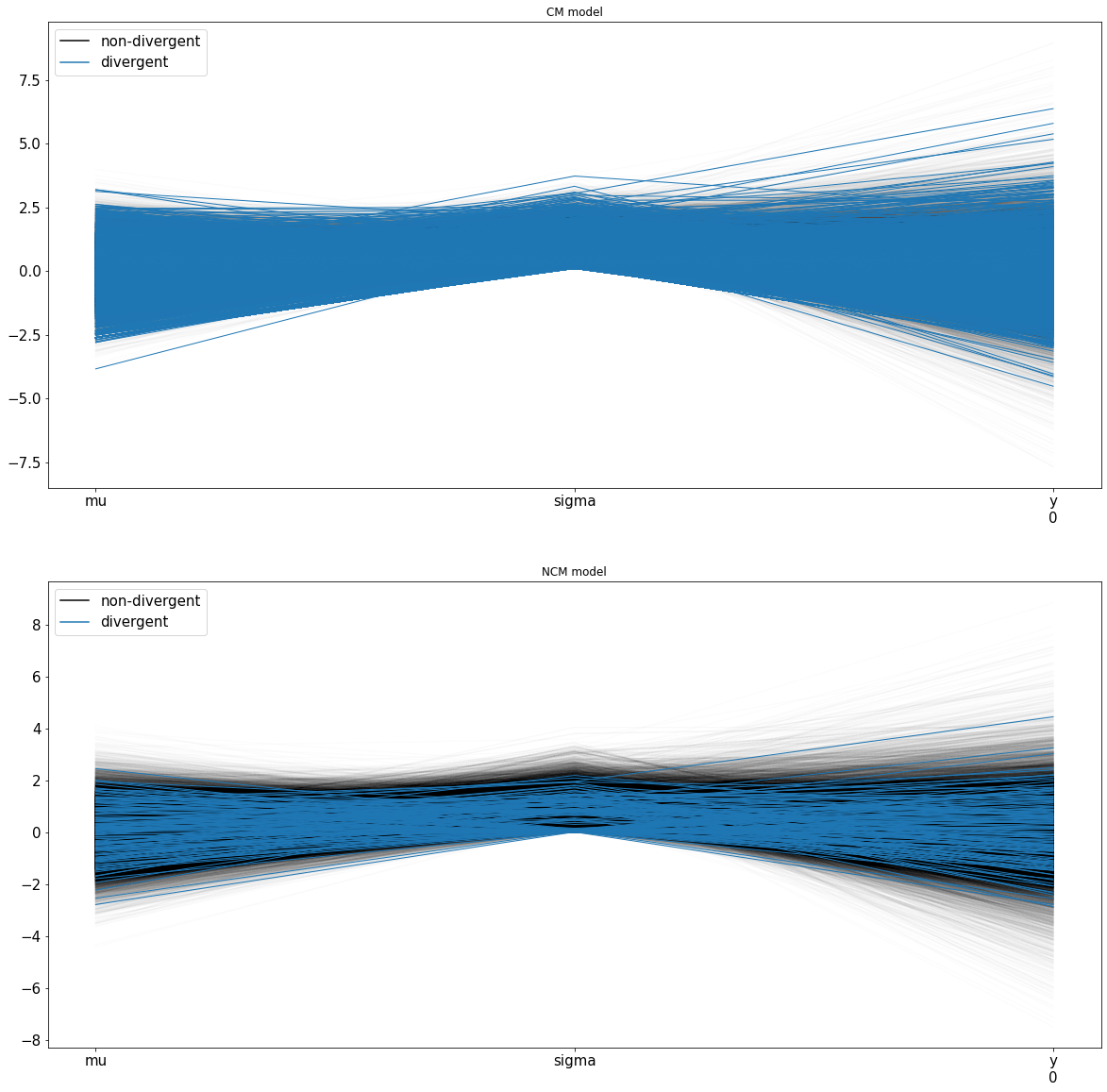

Sampling 4 chains, 119 divergences: 100%|██████████| 6000/6000 [00:34<00:00, 173.77draws/s]

There were 94 divergences after tuning. Increase `target_accept` or reparameterize.

There were 12 divergences after tuning. Increase `target_accept` or reparameterize.

The acceptance probability does not match the target. It is 0.9296457057021434, but should be close to 0.8. Try to increase the number of tuning steps.

There were 9 divergences after tuning. Increase `target_accept` or reparameterize.

The acceptance probability does not match the target. It is 0.9215539233466684, but should be close to 0.8. Try to increase the number of tuning steps.

There were 4 divergences after tuning. Increase `target_accept` or reparameterize.

The acceptance probability does not match the target. It is 0.9761114878843552, but should be close to 0.8. Try to increase the number of tuning steps.

The number of effective samples is smaller than 10% for some parameters.

Polynomial Regression for Nonlinear Data¶

What happens when the data is inherently nonlinear? It is more appropriate to use non-linear combinations of the inputs. This could be in the form of higher order terms such as \(x^2, x^3\) or it could use basis functions such as the cosine function, \(cos(x)\).

Data Generation¶

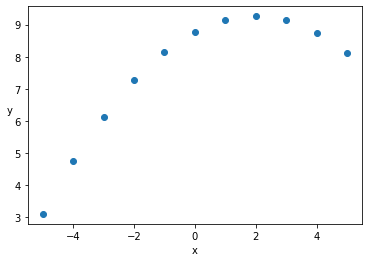

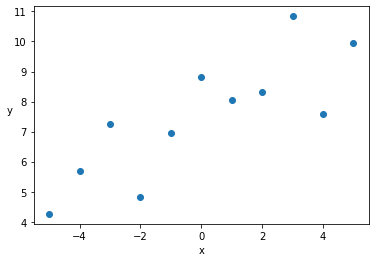

Use the values from the dataset in Anscombe’s quartet we used earlier as our non-linear data. We will use the regression model given by

x_1_centered = x_1 - x_1.mean()

plt.scatter(x_1_centered, y_1)

plt.xlabel('x')

plt.ylabel('y', rotation=0)

plt.figure()

x_0_centered = x_0 - x_0.mean()

plt.scatter(x_0_centered, y_0)

plt.xlabel('x')

plt.ylabel('y', rotation=0)

Text(0, 0.5, 'y')

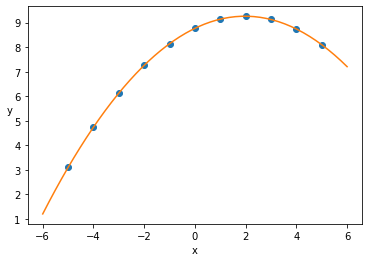

Inference on Data I¶

with pm.Model() as model_poly:

α = pm.Normal('α', mu=y_1.mean(), sd=1)

β1 = pm.Normal('β1', mu=0, sd=1)

β2 = pm.Normal('β2', mu=0, sd=1)

ϵ = pm.HalfCauchy('ϵ', 5)

mu = α + β1 * x_1_centered + β2 * x_1_centered**2

y_pred = pm.Normal('y_pred', mu=mu, sd=ϵ, observed=y_1)

trace = pm.sample(2000, tune=2000)

x_p = np.linspace(-6, 6)

y_p = trace['α'].mean() + trace['β1'].mean() * x_p + trace['β2'].mean() * x_p**2

plt.scatter(x_1_centered, y_1)

plt.xlabel('x')

plt.ylabel('y', rotation=0)

plt.plot(x_p, y_p, c='C1')

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [ϵ, β2, β1, α]

Sampling 4 chains, 0 divergences: 100%|██████████| 16000/16000 [00:08<00:00, 1779.71draws/s]

[<matplotlib.lines.Line2D at 0x7fc300ba97f0>]

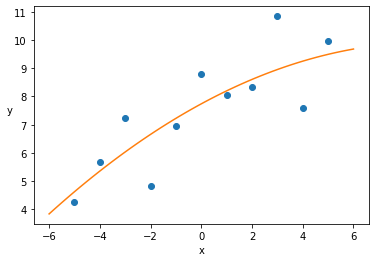

Inference on Data II¶

with pm.Model() as model_poly:

α = pm.Normal('α', mu=y_0.mean(), sd=1)

β1 = pm.Normal('β1', mu=0, sd=1)

β2 = pm.Normal('β2', mu=0, sd=1)

ϵ = pm.HalfCauchy('ϵ', 5)

mu = α + β1 * x_0_centered + β2 * x_0_centered**2

y_pred = pm.Normal('y_pred', mu=mu, sd=ϵ, observed=y_0)

trace = pm.sample(2000)

x_p = np.linspace(-6, 6)

y_p = trace['α'].mean() + trace['β1'].mean() * x_p + trace['β2'].mean() * x_p**2

plt.scatter(x_0_centered, y_0)

plt.xlabel('x')

plt.ylabel('y', rotation=0)

plt.plot(x_p, y_p, c='C1')

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [ϵ, β2, β1, α]

Sampling 4 chains, 0 divergences: 100%|██████████| 10000/10000 [00:05<00:00, 1669.11draws/s]

[<matplotlib.lines.Line2D at 0x7fc2fd471e80>]

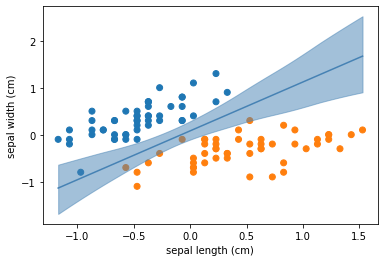

Multiple Linear Regression¶

In Multiple Linear Regression, there is more than one independent variable to predict the outcome of one dependent variable.

Data Generation¶

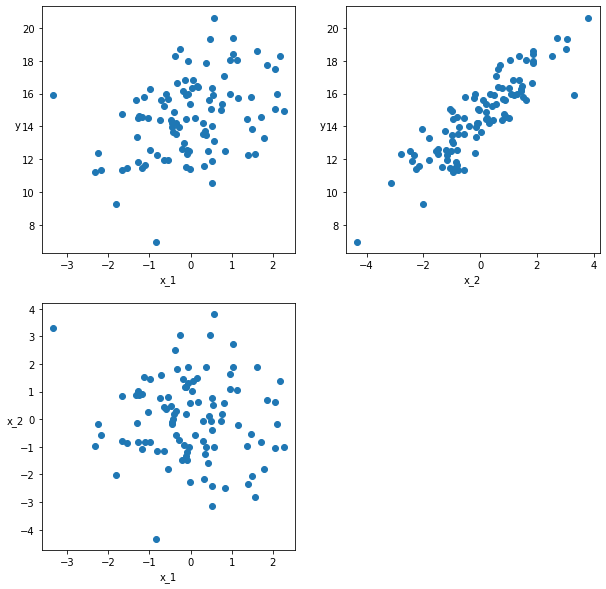

The example below generates two-dimensional data for X. It plots the variation of ‘y’ with each component of X in the top two figures. The bottom figure indicates the correlation of the two components of X.

np.random.seed(314)

# N is the total number of observations

N = 100

# m is 2, the number of independent variables

alpha_real = 2.5

beta_real = [0.9, 1.5]

eps_real = np.random.normal(0, 0.5, size=N)

# X is # n x m

X = np.array([np.random.normal(i, j, N) for i, j in zip([10, 2], [1, 1.5])]).T

X_mean = X.mean(axis=0, keepdims=True)

X_centered = X - X_mean

y = alpha_real + np.dot(X, beta_real) + eps_real

def scatter_plot(x, y):

plt.figure(figsize=(10, 10))

for idx, x_i in enumerate(x.T):

plt.subplot(2, 2, idx+1)

plt.scatter(x_i, y)

plt.xlabel(f'x_{idx+1}')

plt.ylabel(f'y', rotation=0)

plt.subplot(2, 2, idx+2)

plt.scatter(x[:, 0], x[:, 1])

plt.xlabel(f'x_{idx}')

plt.ylabel(f'x_{idx+1}', rotation=0)

scatter_plot(X_centered, y)

Inference¶

This code is very similar to what we have already seen, the only real difference being the dimensionality of the coefficients and the inputs. Something you would notice is that as the number of unknowns increase, the uncertainty associated with our inferences become larger. It is beneficial to have more accurate priors in this situation.

with pm.Model() as model_mlr:

α_tmp = pm.Normal('α_tmp', mu=2, sd=2) # Try changing the prior distribution

β = pm.Normal('β', mu=0, sd=5, shape=2) # Note the shape of beta

ϵ = pm.HalfCauchy('ϵ', 5)

μ = α_tmp + pm.math.dot(X_centered, β)

α = pm.Deterministic('α', α_tmp - pm.math.dot(X_mean, β))

y_pred = pm.Normal('y_pred', mu=μ, sd=ϵ, observed=y)

trace = pm.sample(2000, tune=1000)

az.summary(trace)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [ϵ, β, α_tmp]

Sampling 4 chains, 0 divergences: 100%|██████████| 12000/12000 [00:05<00:00, 2090.64draws/s]

| mean | sd | hpd_3% | hpd_97% | mcse_mean | mcse_sd | ess_mean | ess_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| α_tmp | 14.589 | 0.048 | 14.502 | 14.679 | 0.000 | 0.000 | 12793.0 | 12793.0 | 12773.0 | 6290.0 | 1.0 |

| β[0] | 0.971 | 0.044 | 0.885 | 1.050 | 0.000 | 0.000 | 10265.0 | 10248.0 | 10282.0 | 6003.0 | 1.0 |

| β[1] | 1.471 | 0.033 | 1.411 | 1.533 | 0.000 | 0.000 | 11240.0 | 11240.0 | 11270.0 | 5879.0 | 1.0 |

| ϵ | 0.474 | 0.035 | 0.412 | 0.545 | 0.000 | 0.000 | 10134.0 | 10104.0 | 10083.0 | 6060.0 | 1.0 |

| α[0] | 1.822 | 0.457 | 0.975 | 2.688 | 0.005 | 0.003 | 10166.0 | 9754.0 | 10210.0 | 6202.0 | 1.0 |

pm.model_to_graphviz(model_mlr)

Logistic Regression¶

While everything we have seen so far involved regression, the same ideas can be applied to a classification task as well. We use the logistic regression model to perform this classification here. The name ‘regression’ is due to the fact that the model outputs class probabilities as numbers which is then converted into classes using a decision boundary. There are many ways to select an appropriate decision boundary, a few of which were covered in Course 1 and Course 2.

Inverse Link function¶

At this point it is a good idea to bring up the concept of a inverse link function, which takes the form

\(\theta = f(\alpha + \beta x)\)

Here ‘f’ is called the inverse link function, the term inverse refers to the fact that the function is applied to the right hand side of the equation. In a linear regression, this inverse link function is the identity function. In the case of a linear regression model, the value ‘y’ at any point ‘x’ is modeled as the mean of a Gaussian distribution centered at the point (x,y). The error as a result of the true ‘y’ and the estimated ‘y’ are modeled with the standard deviation of this Gaussian at that point (x,y). Now think about the scenario where this is not appropriately modeled using a Gaussian. A classification problem is a perfect example of such a scenario where the discrete classes are not modeled well as a Gaussian and hence we can’t use this distribution to model the mean of those classes. As a result, we would like to convert the output of \(\alpha + \beta x\) to some other range of values that are more appropriate to the problem being modeled, which is what the link function intends to do.

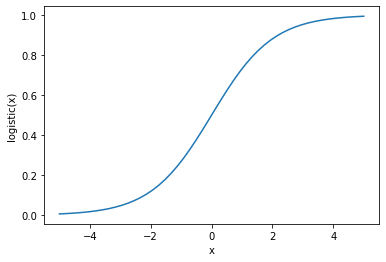

Logistic function¶

The logistic function is defined as the function

\(logistic(x) = \dfrac{1}{1 + \exp{(-x)}}\)

This is also called the sigmoid function and it restricts the value of the output to the range [0,1].

x = np.linspace(-5,5)

plt.plot(x, 1 / (1 + np.exp(-x)))

plt.xlabel('x')

plt.ylabel('logistic(x)')

Text(0, 0.5, 'logistic(x)')

Example using the Iris data¶

The simplest example using a logistic regression model is one that can be used to identify two classes. If you are given a set of independent variables that are features which correspond to an output dependent variable that is a class, you can build a model to learn the relationship between the features and the output classes. This is done with the help of the logistic function which acts as the inverse link function to relate the features to the output class.

\(\theta = logistic(\alpha + \beta x)\)

If it is a two-class problem (binary classification), the output variable can be represented by a Bernoulli distribution.

\(y \sim Bern(\theta)\)

The mean parameter \(\theta\) is now given by the regression equation \(logistic(\alpha + \beta x)\). In regular linear regression, this parameter was drawn from a Gaussian distribution. In the case of the coin-flip example the data likelihood was represented by a Bernoulli distribution, (the parameter \(\theta\) was drawn from a Beta prior distribution there), similarly we have output classes associated with every observation here.

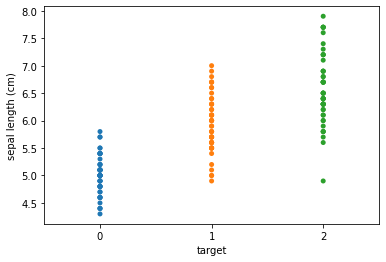

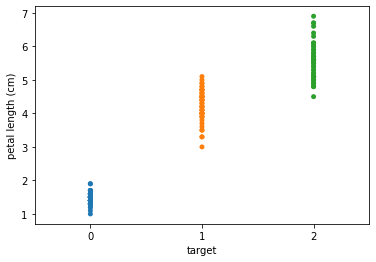

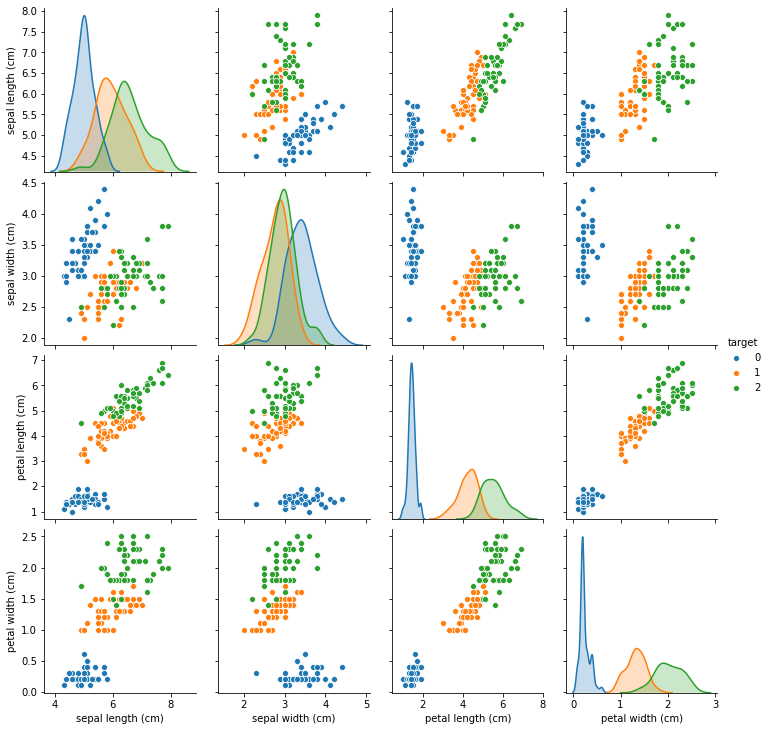

We load the iris data from scikit learn and

Plot the distribution of the three classes for two of the features.

We also perform a pairplot to visualize the correlation of each feature with every other feature. The diagonal of this plot shows the distribution of the three classes for that feature.

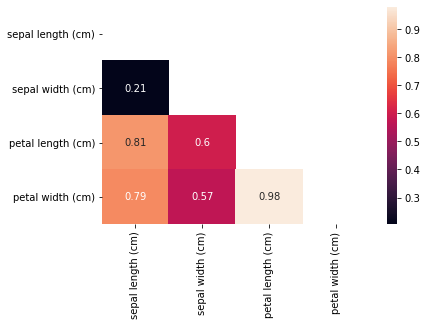

Correlation plot of just the features. This can be visually cleaner and cognitively simpler to comprehend.

import pymc3 as pm

import sklearn

import numpy as np

import graphviz

import pandas as pd

from matplotlib import pyplot as plt

import seaborn

from sklearn import datasets

df = datasets.load_iris()

iris_data = pd.DataFrame(df['data'], columns=df['feature_names'])

iris_data['target'] = df['target']

seaborn.stripplot(x='target', y='sepal length (cm)', data=iris_data, jitter=False)

plt.figure()

seaborn.stripplot(x='target', y='petal length (cm)', data=iris_data, jitter=False)

plt.figure()

seaborn.pairplot(iris_data, hue='target', diag_kind='kde')

plt.figure()

corr = iris_data.query("target == (0,1)").loc[:, iris_data.columns != 'target'].corr()

mask = np.tri(*corr.shape).T

seaborn.heatmap(corr.abs(), mask=mask, annot=True)

plt.show()

<Figure size 432x288 with 0 Axes>

You would notice that some of the variables have a high degree of correlation from the correlation plot. One approach is to eliminate one of the correlated variables. The second option is to mean-center and use a weakly-informative prior such as a Students t-distribution for all variables that are not binary. The scale parameter can be adjusted for the range of expected values for these variables and the normality parameter is recommended to be between 3 and 7. (Source: Andrew Gelman and the Stan team)

df['target_names']

array(['setosa', 'versicolor', 'virginica'], dtype='<U10')

Inference¶

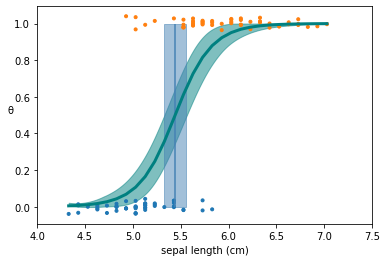

We use a single feature, the sepal length, to learn a decision boundary between the first two classes in the iris data (0,1).

In this case, the decision boundary is defined to be the value of ‘x’ when ‘y’ = 0.5. We won’t go over the derivation here, but this turns out to be \(-\alpha / \beta\). However, this value was chosen under the assumption that the midpoint of the class values are a good candidate for separating the classes, but this does not have to be the case.

# Select the first two classes for a binary classification problem

df = iris_data.query("target == (0,1)")

y_0 = df.target

x_n = 'sepal length (cm)'

x_0 = df[x_n].values

x_c = x_0 - x_0.mean()

import pymc3 as pm

import arviz as az

with pm.Model() as model_0:

α = pm.Normal('α', mu=0, sd=10)

β = pm.Normal('β', mu=0, sd=10)

μ = α + pm.math.dot(x_c, β)

θ = pm.Deterministic('θ', pm.math.sigmoid(μ))

bd = pm.Deterministic('bd', -α/β)

yl = pm.Bernoulli('yl', p=θ, observed=y_0)

trace_0 = pm.sample(1000)

pm.model_to_graphviz(model_0)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [β, α]

Sampling 4 chains, 0 divergences: 100%|██████████| 6000/6000 [00:03<00:00, 1717.46draws/s]

az.summary(trace_0, var_names=["α","β","bd"])

| mean | sd | hpd_3% | hpd_97% | mcse_mean | mcse_sd | ess_mean | ess_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| α | 0.313 | 0.338 | -0.295 | 0.977 | 0.006 | 0.005 | 2840.0 | 2158.0 | 2851.0 | 2198.0 | 1.0 |

| β | 5.401 | 1.044 | 3.501 | 7.359 | 0.019 | 0.014 | 3048.0 | 2843.0 | 3131.0 | 2400.0 | 1.0 |

| bd | -0.057 | 0.062 | -0.176 | 0.054 | 0.001 | 0.001 | 2901.0 | 2425.0 | 2915.0 | 2303.0 | 1.0 |

Visualizing the Decision Boundary¶

The classifier outputs, i.e. the y values are jittered to make it easier to visualize.

The solid green lines are the mean of the fitted \(\theta\) as a result of the sampling and inference process

The transparent green lines indicate the 94% HPD (default values) for the fitted \(\theta\).

The solid blue line indicates the decision boundary that is derived from the inferred values of the parameters using the equation \(\alpha/\beta\)

The transparent blue indicates the HPD (94%) for the decision boundary.

theta = trace_0['θ'].mean(axis=0)

idx = np.argsort(x_c)

# Plot the fitted theta

plt.plot(x_c[idx], theta[idx], color='teal', lw=3)

# Plot the HPD for the fitted theta

az.plot_hpd(x_c, trace_0['θ'], color='teal')

plt.xlabel(x_n)

plt.ylabel('θ', rotation=0)

# Plot the decision boundary

plt.vlines(trace_0['bd'].mean(), 0, 1, color='steelblue')

# Plot the HPD for the decision boundary

bd_hpd = az.hpd(trace_0['bd'])

plt.fill_betweenx([0, 1], bd_hpd[0], bd_hpd[1], color='steelblue', alpha=0.5)

plt.scatter(x_c, np.random.normal(y_0, 0.02),

marker='.', color=[f'C{x}' for x in y_0])

# use original scale for xticks

locs, _ = plt.xticks()

plt.xticks(locs, np.round(locs + x_0.mean(), 1))

([<matplotlib.axis.XTick at 0x7fc2e8ccf9a0>,

<matplotlib.axis.XTick at 0x7fc2e8ccf3a0>,

<matplotlib.axis.XTick at 0x7fc2e8e8e160>,

<matplotlib.axis.XTick at 0x7fc300aa44c0>,

<matplotlib.axis.XTick at 0x7fc2fe169b20>,

<matplotlib.axis.XTick at 0x7fc2e8d742e0>,

<matplotlib.axis.XTick at 0x7fc2e8d74220>,

<matplotlib.axis.XTick at 0x7fc2e8d44e20>],

[Text(0, 0, '4.0'),

Text(0, 0, '4.5'),

Text(0, 0, '5.0'),

Text(0, 0, '5.5'),

Text(0, 0, '6.0'),

Text(0, 0, '6.5'),

Text(0, 0, '7.0'),

Text(0, 0, '7.5')])

Multiple Logistic Regression¶